APIs and web scraping

Extracting online content with R

Andrew Heiss

September 12, 2022

Plan for today

- Working with raw data

- Pre-built API packages

- Accessing APIs yourself

- Scraping websites

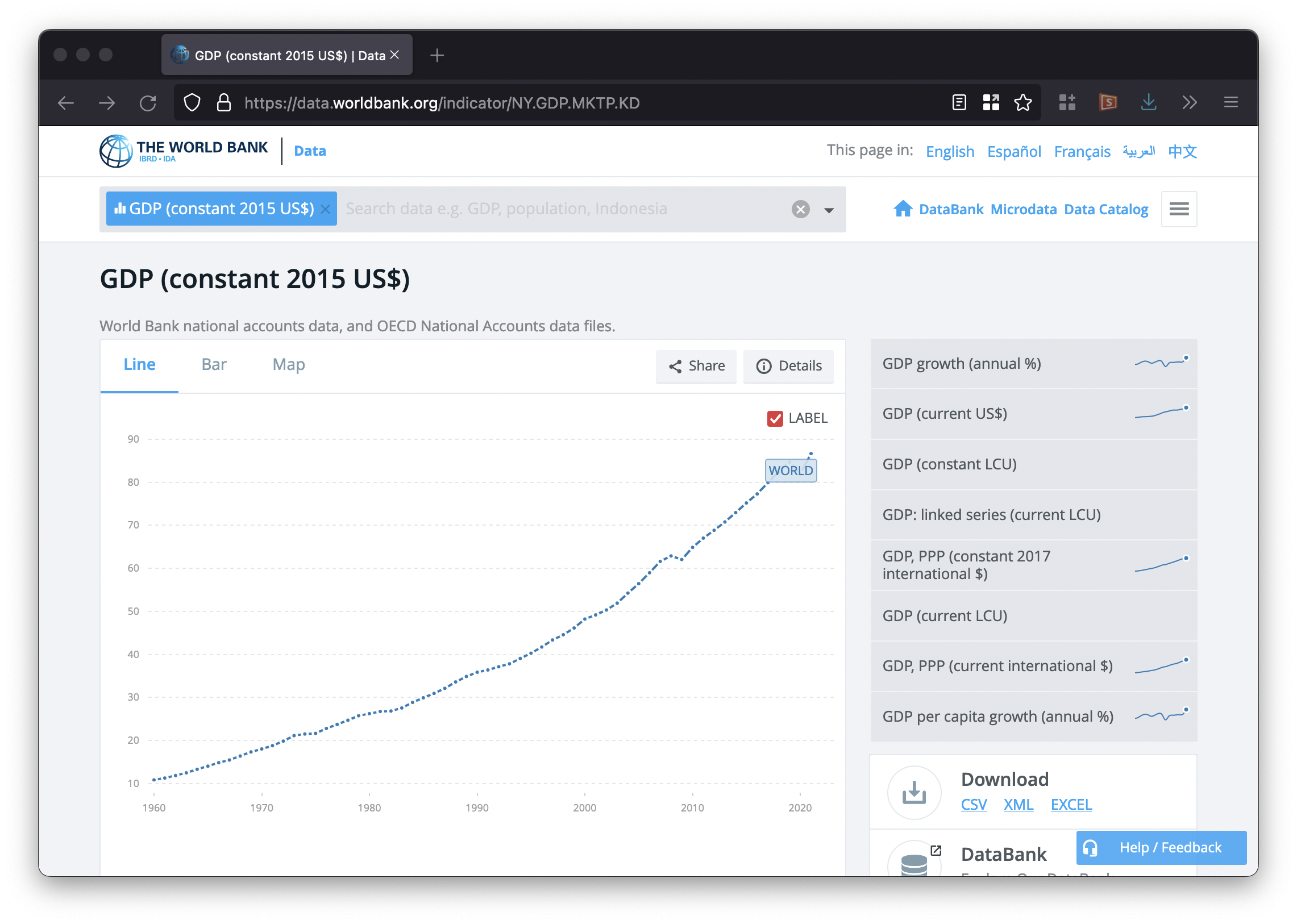

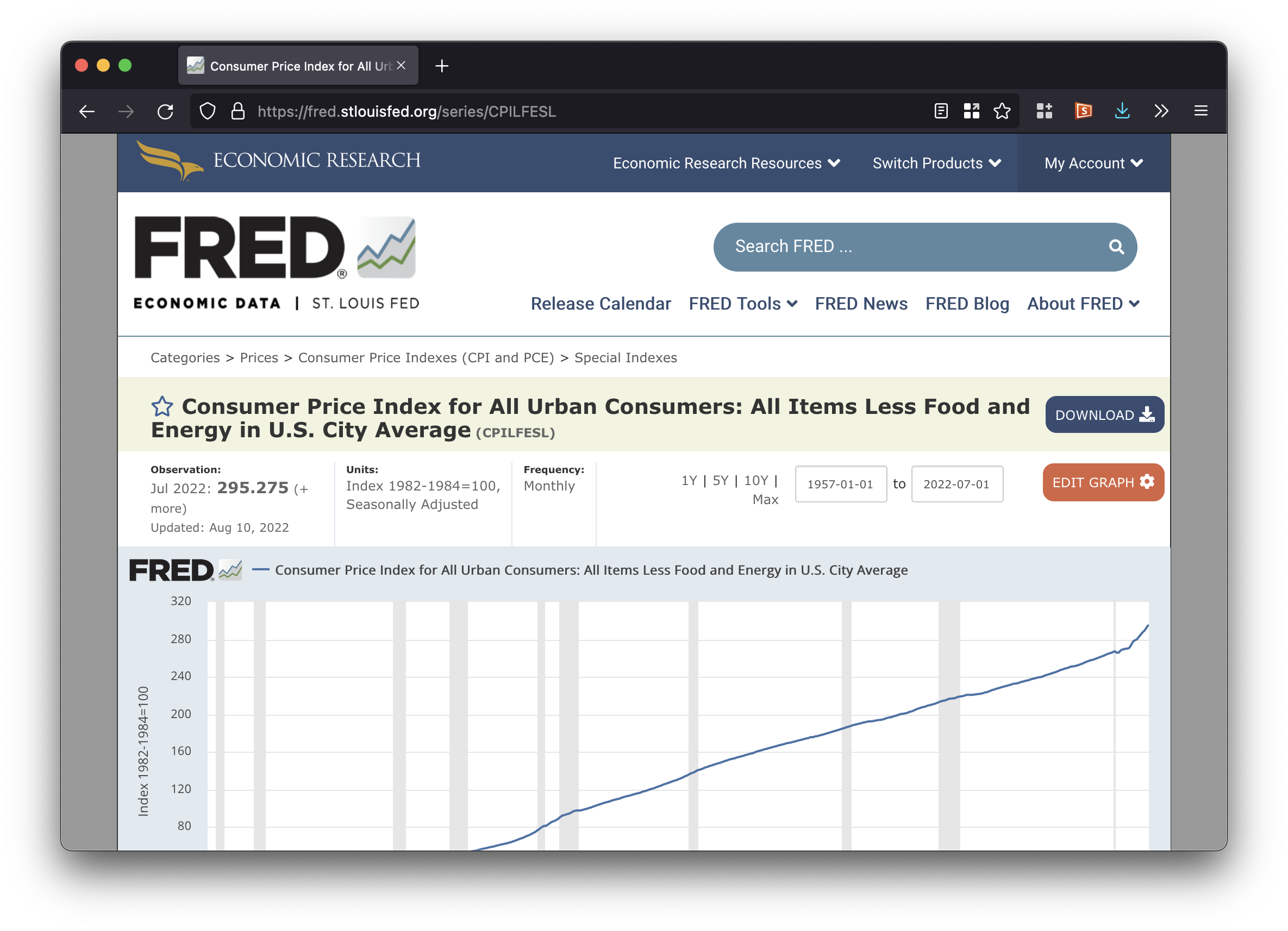

Working with raw data

Finding data online

- Data is everywhere online!

- Often provided as CSV or Excel files

- Read the file into R and clean it up for analysis
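Here is a minimal sketch of the "read it into R" step, assuming {readr}. Some downloads, such as the World Bank's CSV files, put a few lines of metadata above the real header row, so `skip` often needs setting. The tiny file and its numbers below are made up. Open the real file to confirm how many lines to skip.

```r
library(readr)

# Write a made-up stand-in file: two metadata lines, then the real header row
tmp <- tempfile(fileext = ".csv")
writeLines(c('"Data Source","World Development Indicators"',
             '"Last Updated Date","2022-09-01"',
             '"Country Name","Country Code","2020","2021"',
             '"Aruba","ABW",100,110',
             '"Angola","AGO",200,220'),
           tmp)

# skip = 2 jumps past the metadata, so the third line becomes the column names
raw <- read_csv(tmp, skip = 2, show_col_types = FALSE)
raw
```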

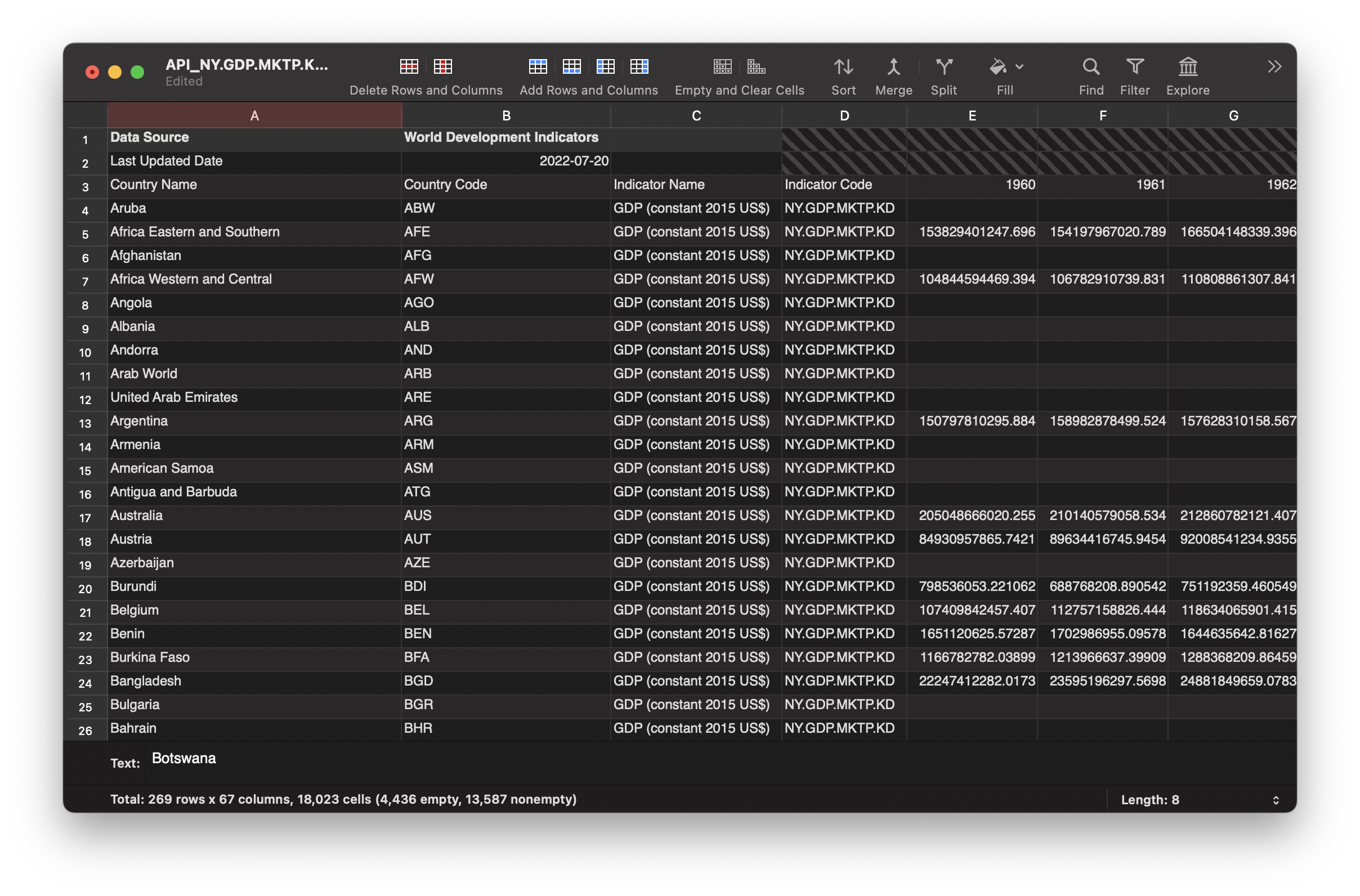

## # A tibble: 6 × 67

## Country Nam…¹ Count…² Indic…³ Indic…⁴ `1960` `1961` `1962` `1963`

## <chr> <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 Aruba ABW GDP (c… NY.GDP… NA NA NA NA

## 2 Africa Easte… AFE GDP (c… NY.GDP… 1.54e11 1.54e11 1.67e11 1.75e11

## 3 Afghanistan AFG GDP (c… NY.GDP… NA NA NA NA

## 4 Africa Weste… AFW GDP (c… NY.GDP… 1.05e11 1.07e11 1.11e11 1.19e11

## 5 Angola AGO GDP (c… NY.GDP… NA NA NA NA

## 6 Albania ALB GDP (c… NY.GDP… NA NA NA NA

## # … with 59 more variables: `1964` <dbl>, `1965` <dbl>, `1966` <dbl>,

## # `1967` <dbl>, `1968` <dbl>, `1969` <dbl>, `1970` <dbl>, `1971` <dbl>,

## # `1972` <dbl>, `1973` <dbl>, `1974` <dbl>, `1975` <dbl>, `1976` <dbl>,

## # `1977` <dbl>, `1978` <dbl>, `1979` <dbl>, `1980` <dbl>, `1981` <dbl>,

## # `1982` <dbl>, `1983` <dbl>, `1984` <dbl>, `1985` <dbl>, `1986` <dbl>,

## # `1987` <dbl>, `1988` <dbl>, `1989` <dbl>, `1990` <dbl>, `1991` <dbl>,

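## # `1992` <dbl>, `1993` <dbl>, `1994` <dbl>, `1995` <dbl>, …

wdi_clean <- wdi_raw %>%
  # These two columns are identical in every row of a single-indicator file
  select(-`Indicator Name`, -`Indicator Code`) %>%
  # One row per country-year instead of one column per year
  pivot_longer(-c(`Country Name`, `Country Code`)) %>%
  # The old column names (years) come in as text, so make them numbers
  mutate(year = as.numeric(name)) %>%
  filter(year >= 2010, `Country Code` %in% c("MYS", "IDN", "SGP"))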

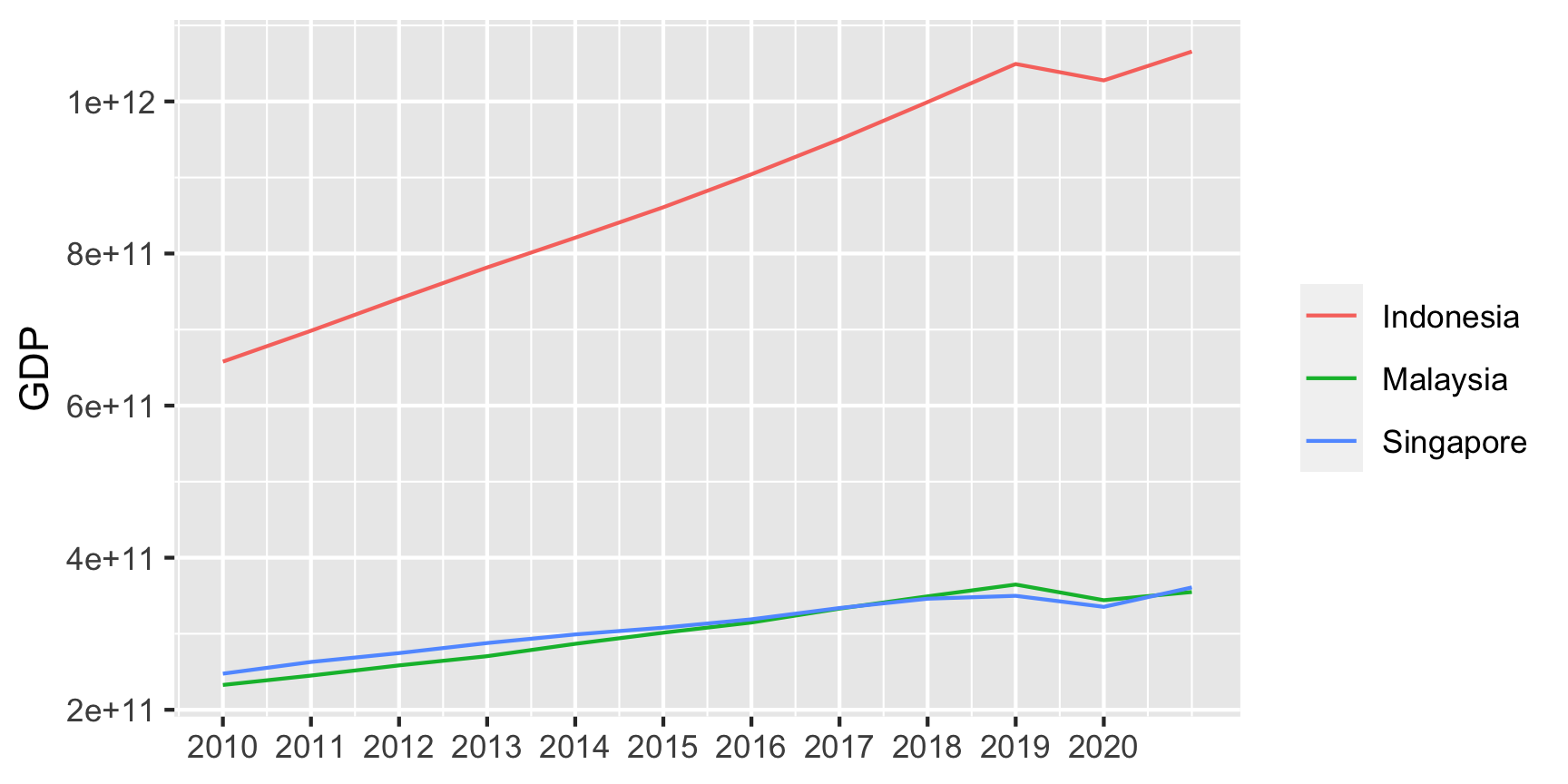

head(wdi_clean)

## # A tibble: 6 × 5
## `Country Name` `Country Code` name value year
## <chr> <chr> <chr> <dbl> <dbl>
## 1 Indonesia IDN 2010 657835435591. 2010
## 2 Indonesia IDN 2011 698422462409. 2011
## 3 Indonesia IDN 2012 740537690665. 2012
## 4 Indonesia IDN 2013 781691322851. 2013
## 5 Indonesia IDN 2014 820828015499. 2014
## 6 Indonesia IDN 2015 860854235065. 2015
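To see what `pivot_longer()` does in the cleaning code above, this runs the same reshaping on a tiny made-up wide table. The numbers are placeholders, not real GDP values. Each year column becomes a row, with the old column name stored in `name` and the cell stored in `value`.

```r
library(dplyr)
library(tidyr)

# Made-up wide data: one row per country, one column per year
wide <- tibble(
  `Country Name` = c("Indonesia", "Malaysia"),
  `Country Code` = c("IDN", "MYS"),
  `2010` = c(1, 4),
  `2011` = c(2, 5),
  `2012` = c(3, 6)
)

# Stack every column except the two ID columns into name/value pairs
long <- wide %>%
  pivot_longer(-c(`Country Name`, `Country Code`)) %>%
  mutate(year = as.numeric(name))

long  # 2 countries x 3 years = 6 rows
```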

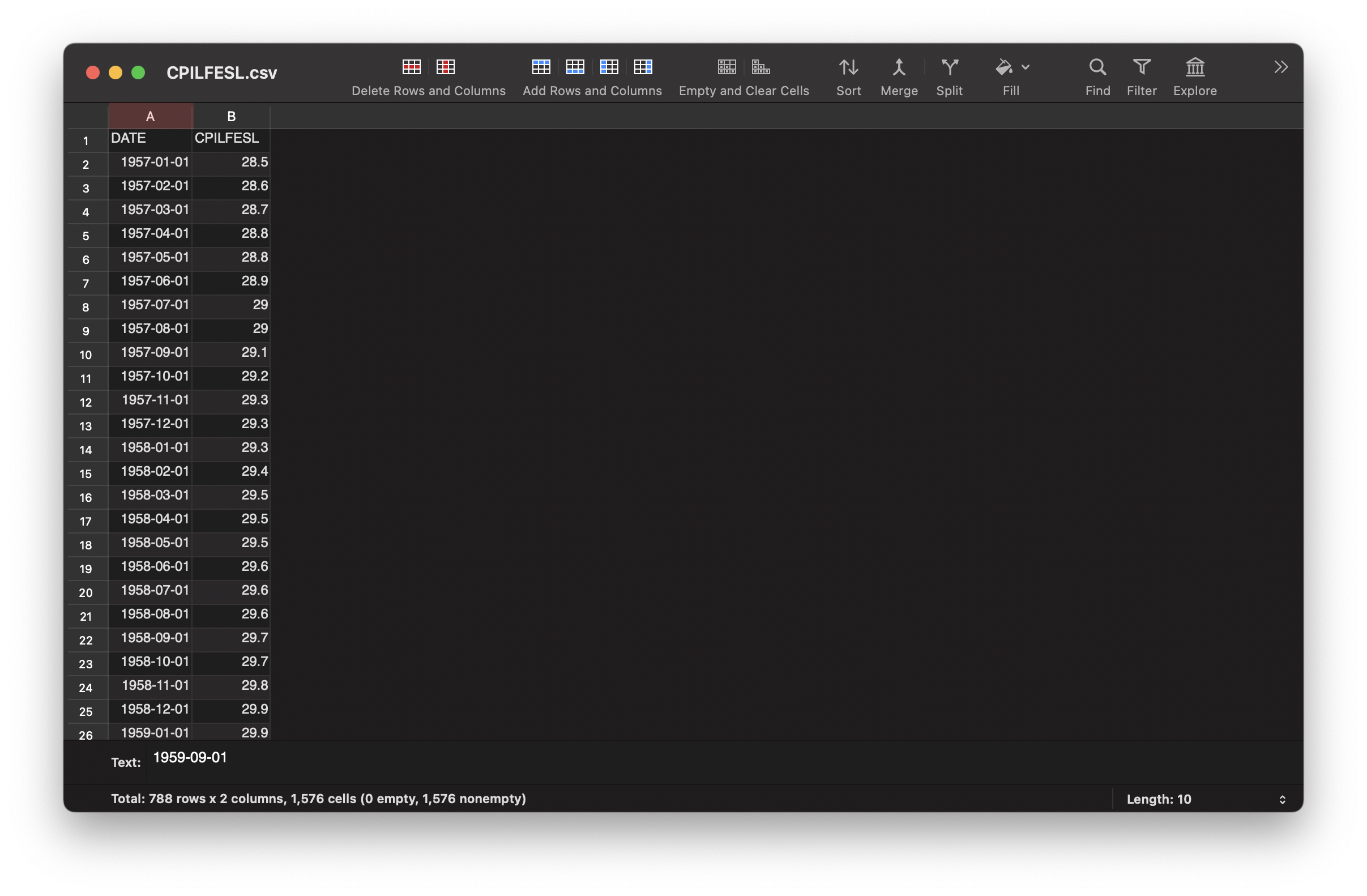

## # A tibble: 6 × 2

## DATE CPILFESL

## <date> <dbl>

## 1 1957-01-01 28.5

## 2 1957-02-01 28.6

## 3 1957-03-01 28.7

## 4 1957-04-01 28.8

## 5 1957-05-01 28.8

## 6 1957-06-01 28.9

Your turn!

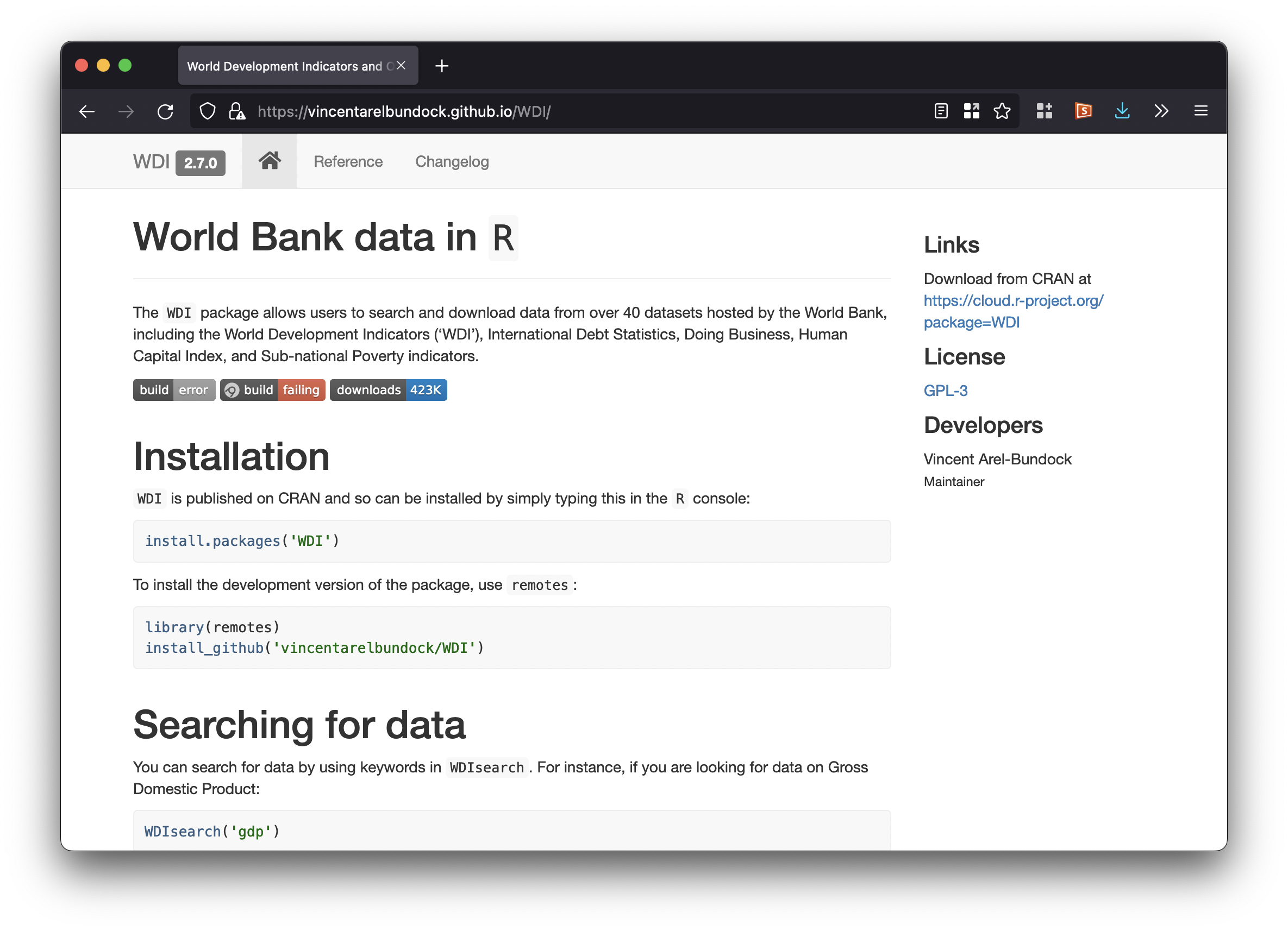

Pre-built API packages

Avoid extra work!

- Try to avoid downloading raw data files whenever possible!

- Many data-focused websites provide more direct access to data through an application programming interface, or API

- Big list of public data APIs

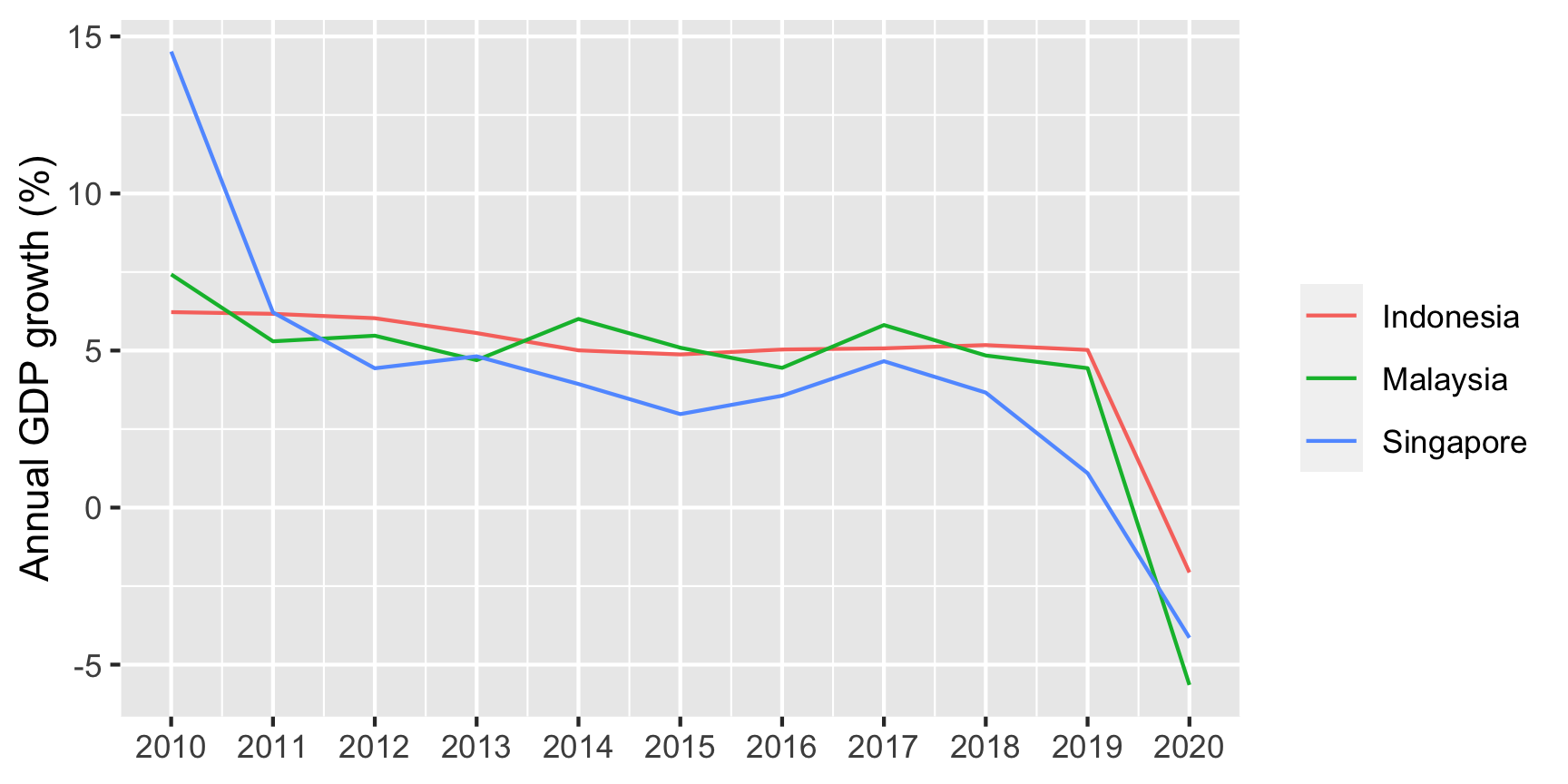

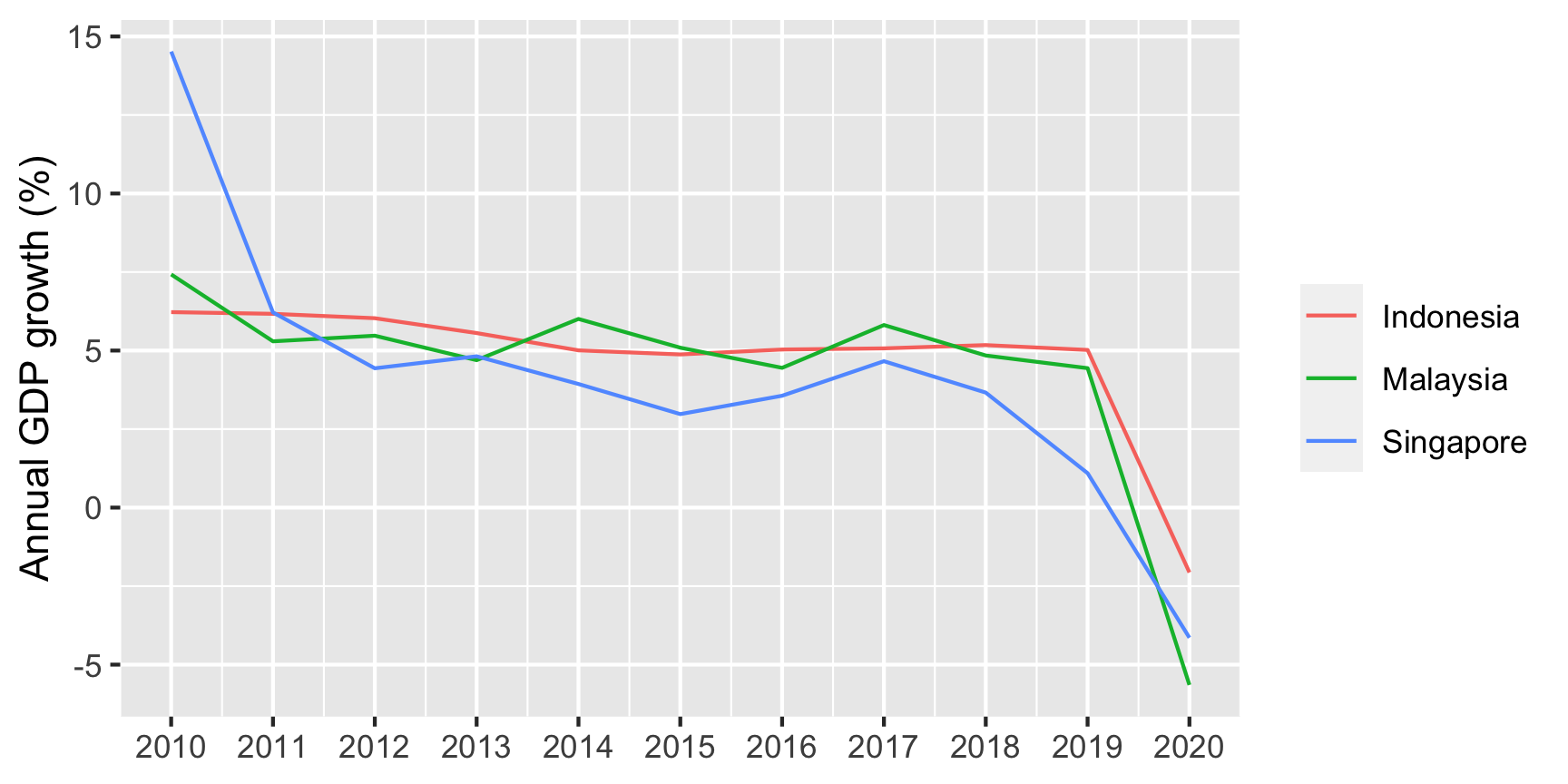

library(WDI)

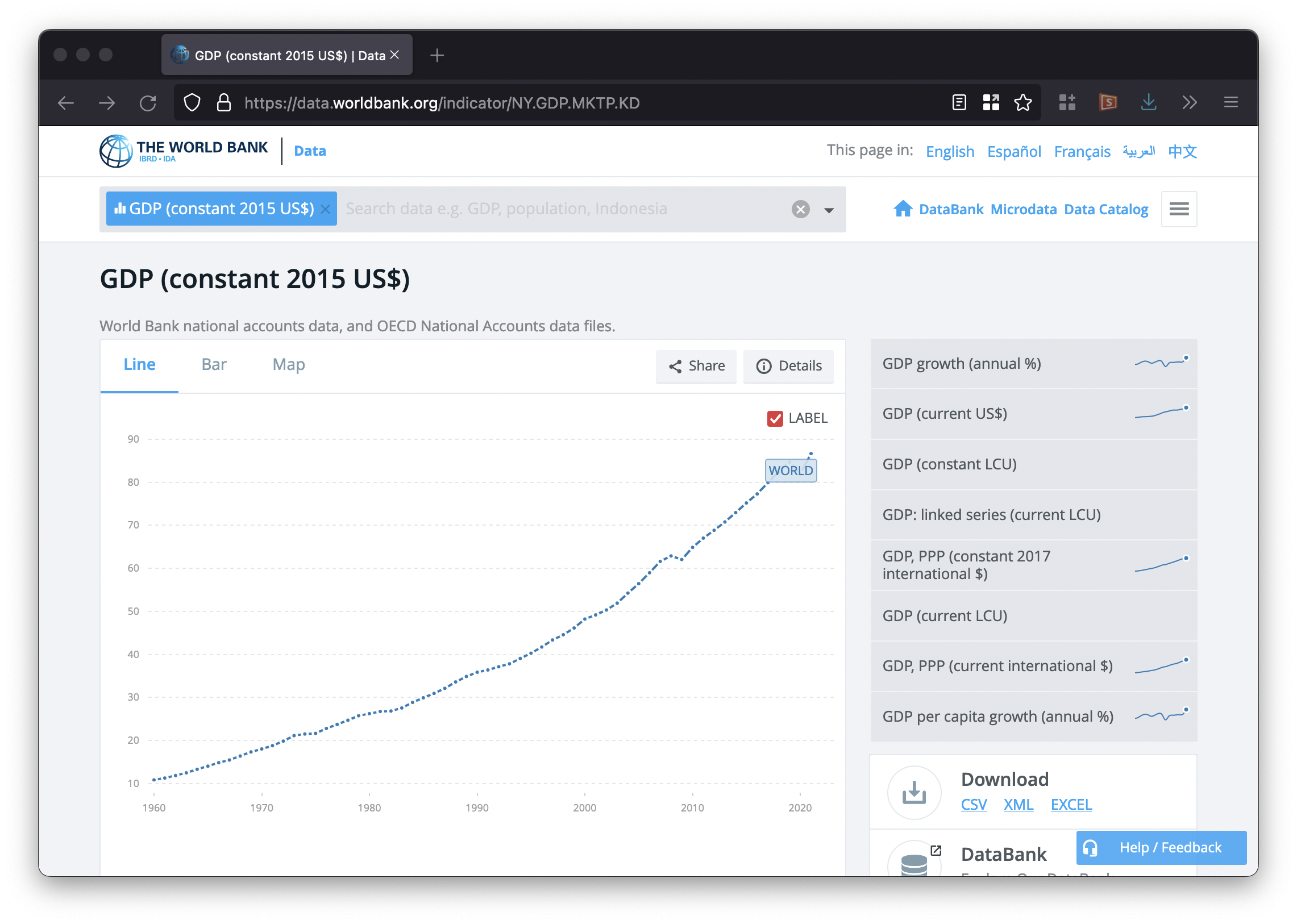

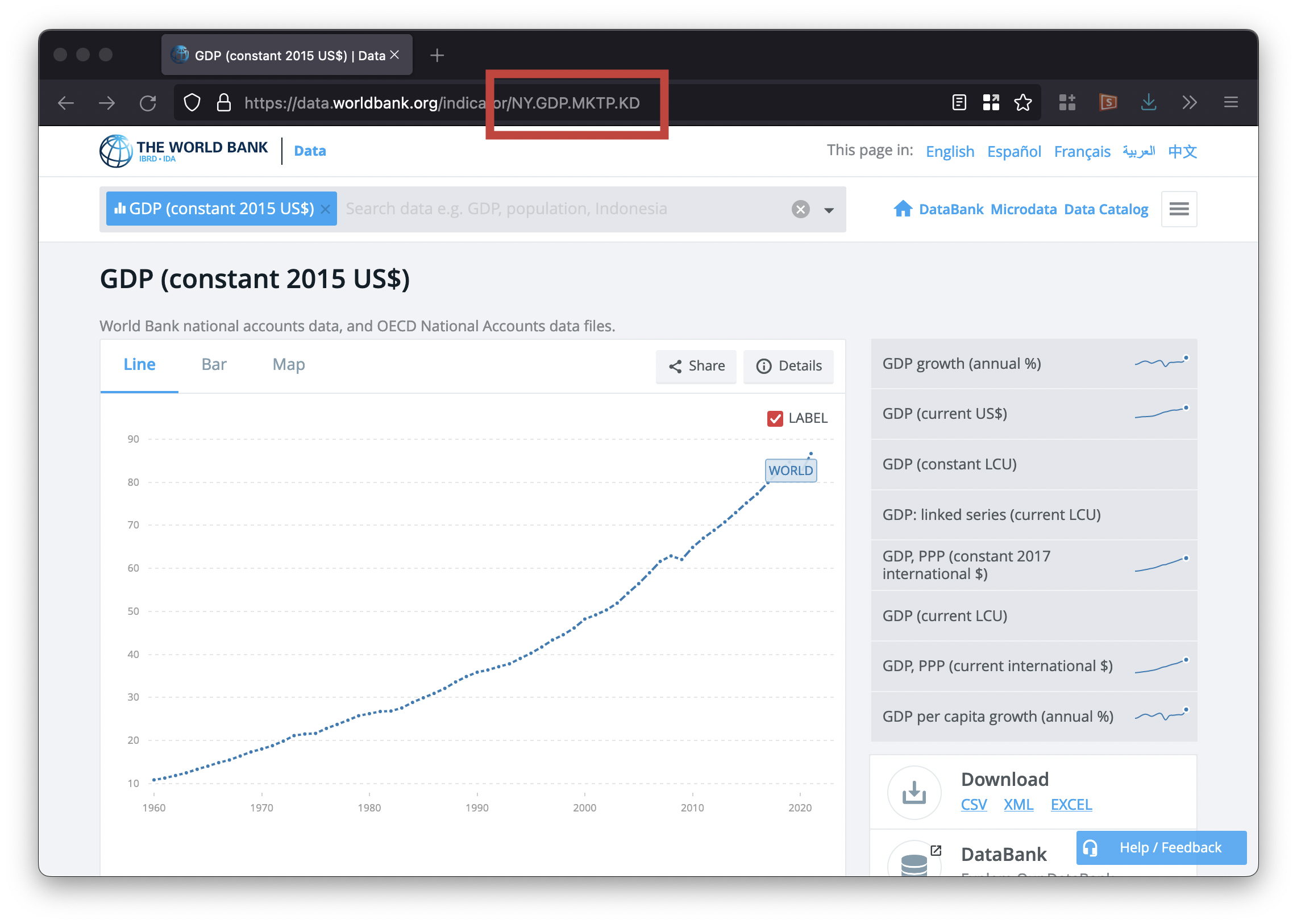

data <- WDI(country = c("MY", "ID", "SG"),
            indicator = c("NY.GDP.MKTP.KD",     # GDP, constant 2015 USD
                          "NY.GDP.MKTP.KD.ZG"), # GDP growth, annual %
            extra = TRUE,  # Adds region, capital, coordinates, income group, and lending type
            start = 2010,
            end = 2020)

head(data)

## # A tibble: 6 × 12

## iso2c country year NY.GD…¹ NY.GD…² iso3c region capital longi…³ latit…⁴

## <chr> <chr> <int> <dbl> <dbl> <chr> <chr> <chr> <chr> <chr>

## 1 ID Indones… 2010 6.58e11 6.22 IDN East … Jakarta 106.83 -6.197…

## 2 ID Indones… 2011 6.98e11 6.17 IDN East … Jakarta 106.83 -6.197…

## 3 ID Indones… 2012 7.41e11 6.03 IDN East … Jakarta 106.83 -6.197…

## 4 ID Indones… 2013 7.82e11 5.56 IDN East … Jakarta 106.83 -6.197…

## 5 ID Indones… 2014 8.21e11 5.01 IDN East … Jakarta 106.83 -6.197…

## 6 ID Indones… 2015 8.61e11 4.88 IDN East … Jakarta 106.83 -6.197…

## # … with 2 more variables: income <chr>, lending <chr>, and abbreviated

## # variable names ¹NY.GDP.MKTP.KD, ²NY.GDP.MKTP.KD.ZG, ³longitude,

## # ⁴latitude

library(WDI)

data <- WDI(country = c("MY", "ID", "SG"),
            indicator = c(gdp = "NY.GDP.MKTP.KD",          # GDP, constant 2015 USD
                          gdp_growth = "NY.GDP.MKTP.KD.ZG"), # GDP growth, annual %
            extra = TRUE,  # Adds region, capital, coordinates, income group, and lending type
            start = 2010,
            end = 2020)

head(data)

## # A tibble: 6 × 6

## iso2c country year gdp gdp_growth others

## <chr> <chr> <int> <dbl> <dbl> <chr>

## 1 ID Indonesia 2010 657835435591. 6.22 ...

## 2 ID Indonesia 2011 698422462409. 6.17 ...

## 3 ID Indonesia 2012 740537690665. 6.03 ...

## 4 ID Indonesia 2013 781691322851. 5.56 ...

## 5 ID Indonesia 2014 820828015499. 5.01 ...

## 6 ID Indonesia 2015 860854235065. 4.88 ...

Your turn!

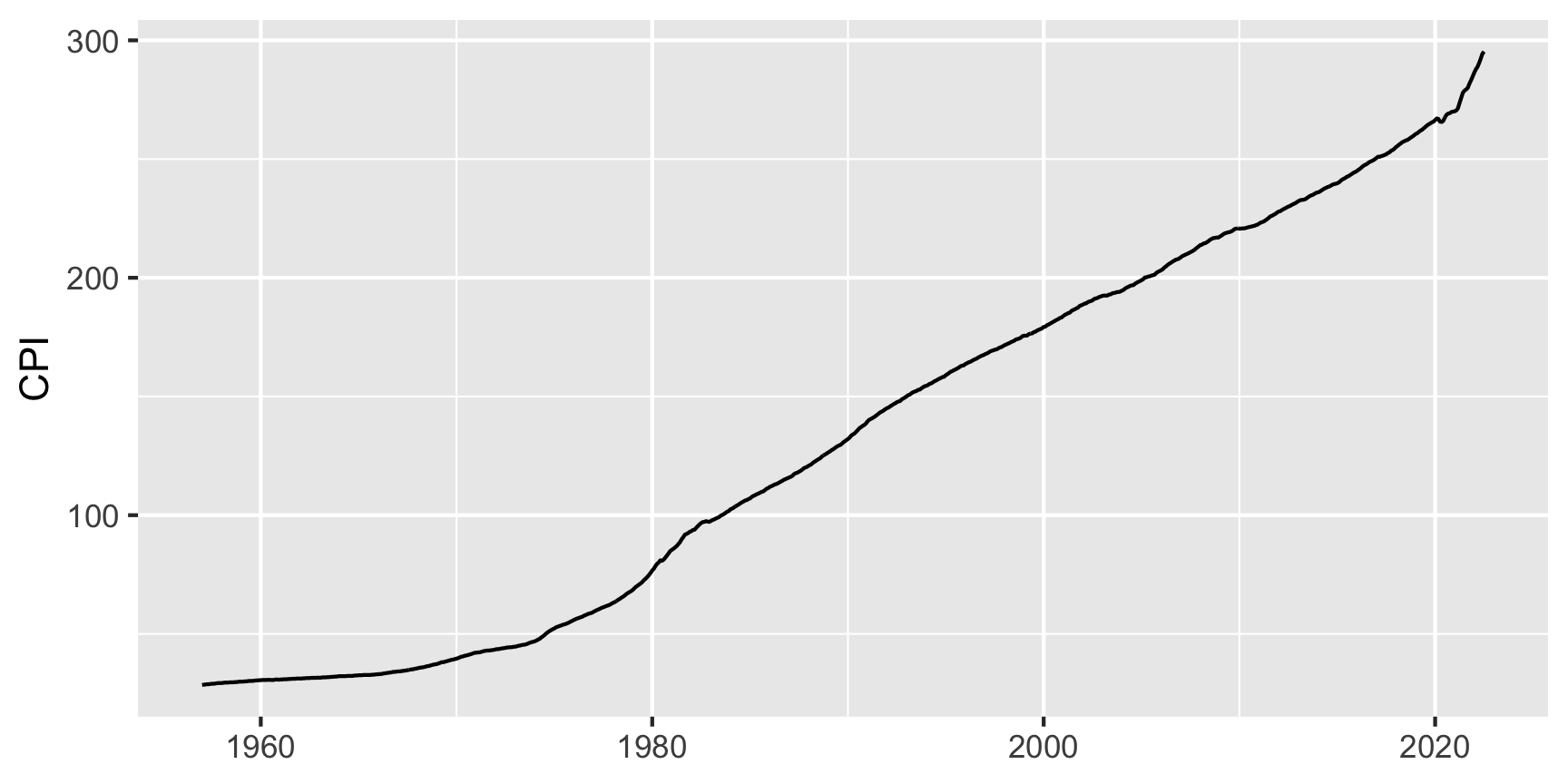

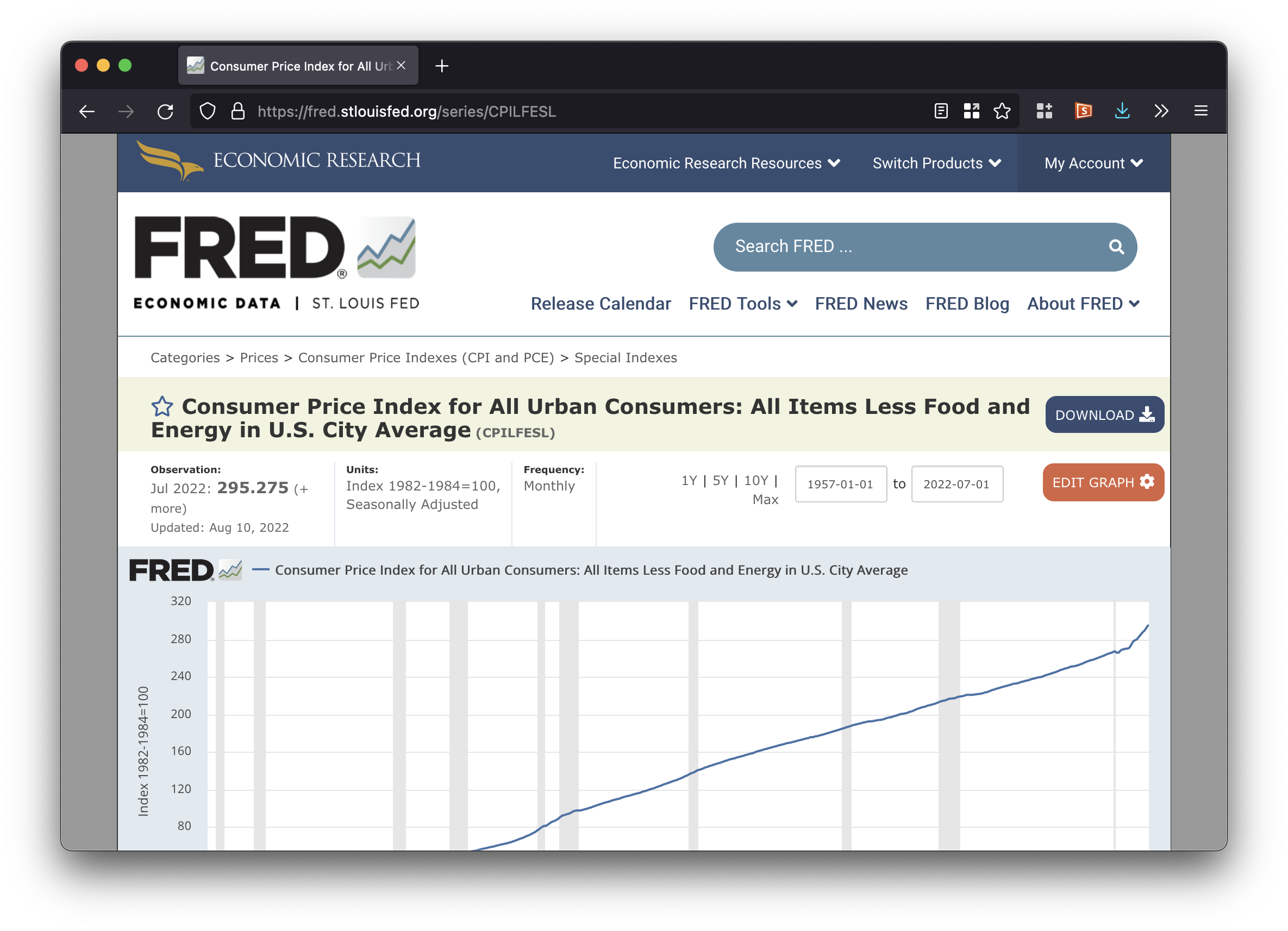

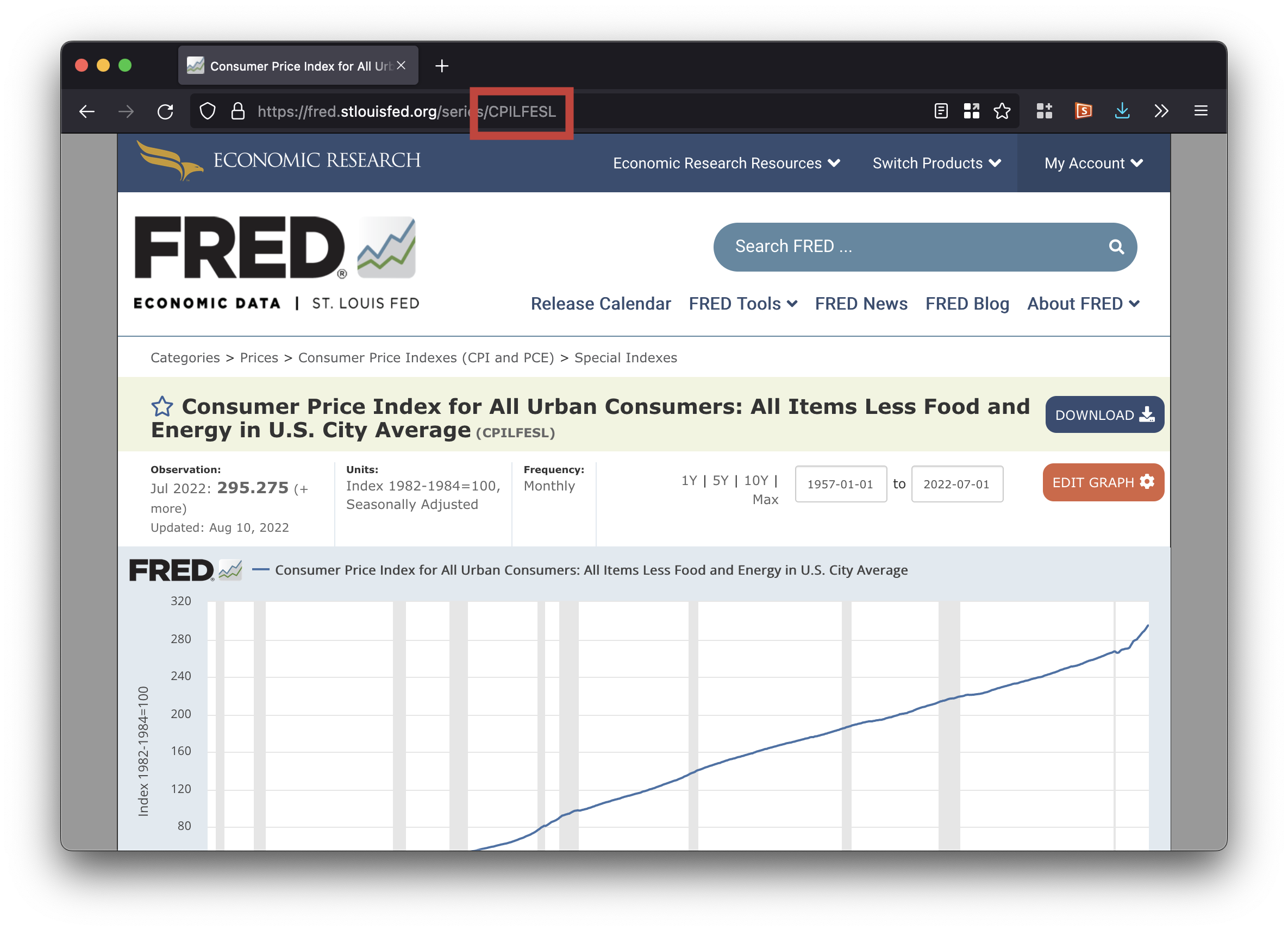

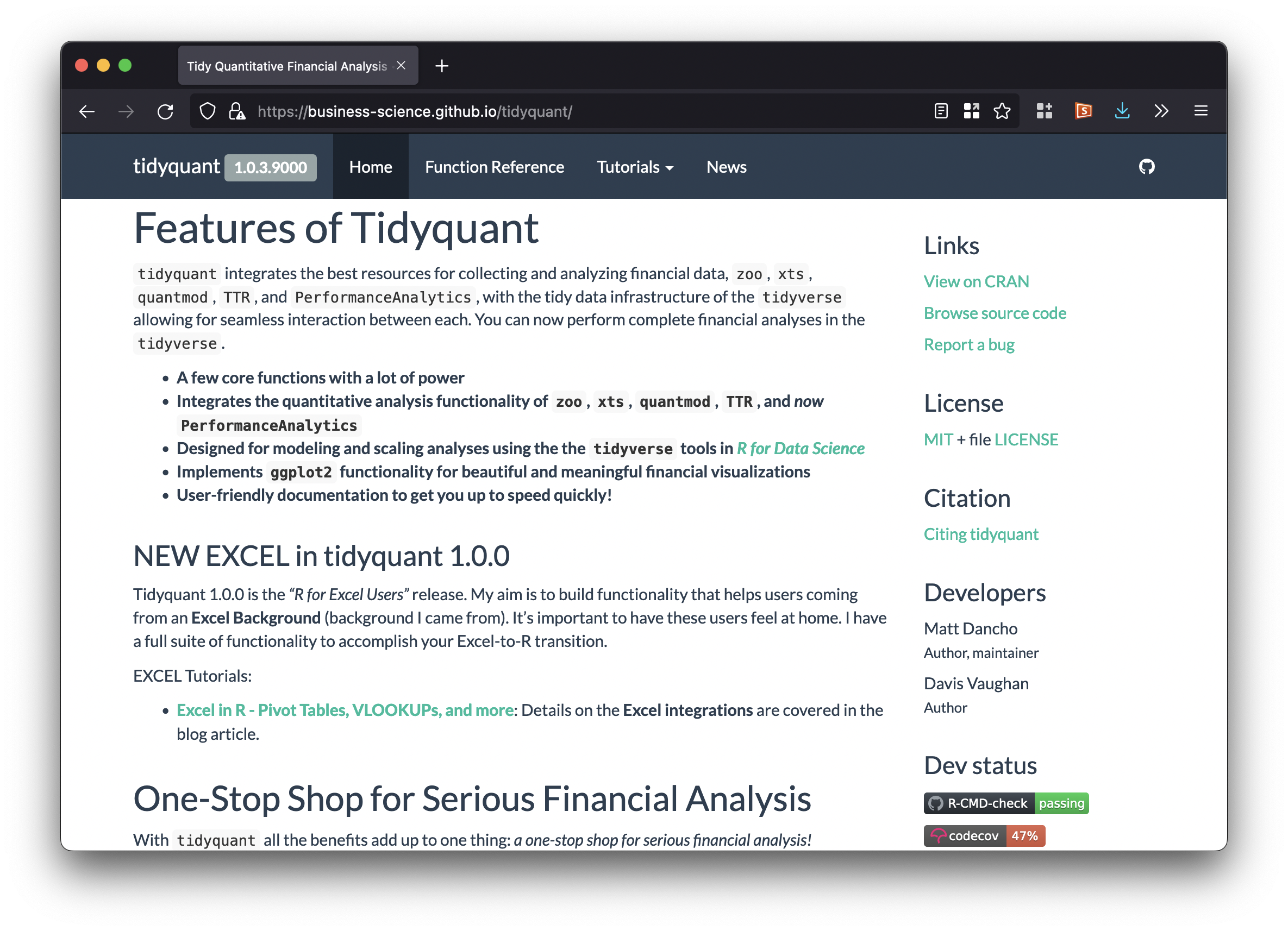

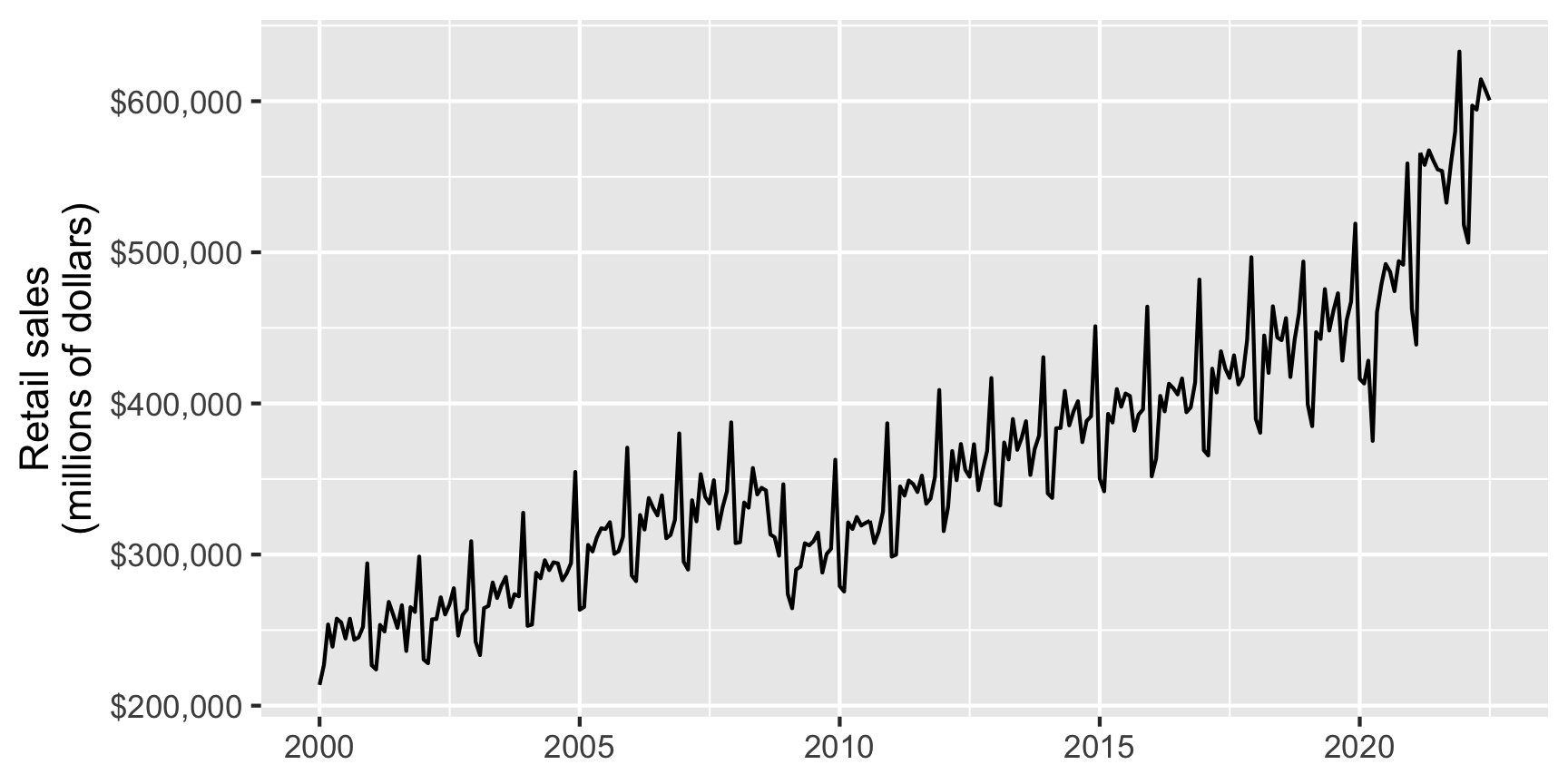

library(tidyquant)

data <- tq_get(x = c("CPILFESL",  # Core CPI (all items less food and energy)
                     "RSXFSN",    # Advance retail sales
                     "USREC"),    # US recessions
               get = "economic.data",  # Use FRED
               from = "2000-01-01",
               to = "2022-09-01")

head(data)

## # A tibble: 6 × 3

## symbol date price

## <chr> <date> <dbl>

## 1 CPILFESL 2000-01-01 179.

## 2 CPILFESL 2000-02-01 179.

## 3 CPILFESL 2000-03-01 180

## 4 CPILFESL 2000-04-01 180.

## 5 CPILFESL 2000-05-01 181.

## 6 CPILFESL 2000-06-01 181.

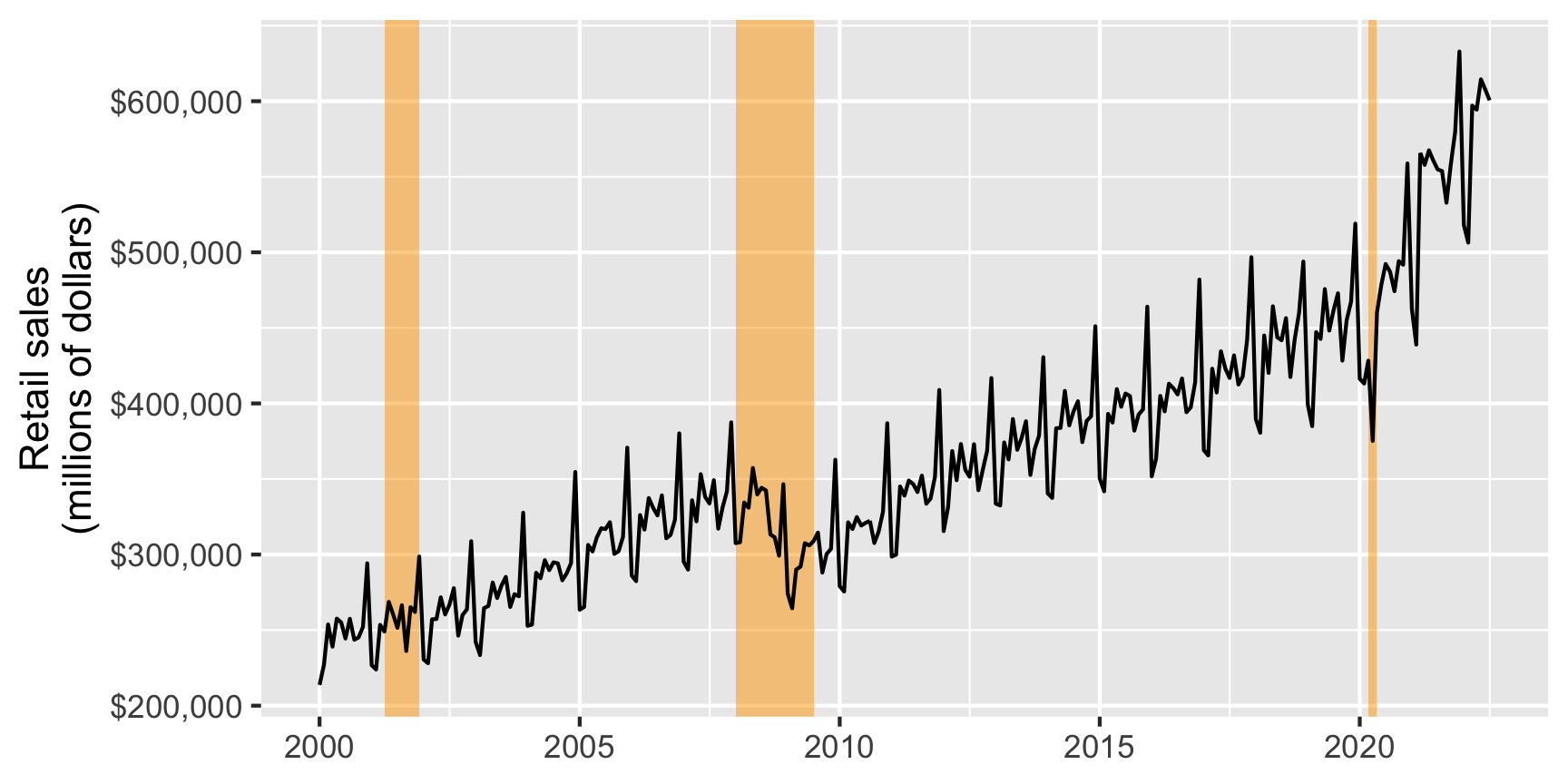

library(tidyverse)

# Find the months where the recession indicator flips from 0 to 1 (start)
# or from 1 to 0 (first month after the recession ends)
recessions_start_end <- data %>%
  filter(symbol == "USREC") %>%
  mutate(recession_change = price - lag(price)) %>%
  filter(recession_change != 0)

recessions <- tibble(start = filter(recessions_start_end, recession_change == 1)$date,
                     end = filter(recessions_start_end, recession_change == -1)$date)

# Keep only the retail sales series for the line
retail <- data %>%
  filter(symbol == "RSXFSN")

ggplot(retail, aes(x = date, y = price)) +
  geom_rect(data = recessions,
            aes(xmin = start, xmax = end, ymin = -Inf, ymax = Inf),
            inherit.aes = FALSE, fill = "orange", alpha = 0.5) +
  geom_line() +
  scale_y_continuous(labels = scales::label_dollar()) +
  labs(x = NULL, y = "Retail sales\n(millions of dollars)")
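You can check the recession-finding logic on a short made-up 0/1 series. `lag()` compares each month with the one before it, so a change of +1 marks the first month of a recession and -1 marks the first month after one ends. This sketch assumes the series starts and ends outside a recession. If it does not, `start` and `end` have different lengths and `tibble()` stops with an error.

```r
library(dplyr)

# Made-up monthly indicator: 1 = in recession, 0 = not
usrec <- tibble(
  date  = seq(as.Date("2020-01-01"), by = "month", length.out = 8),
  price = c(0, 1, 1, 1, 0, 0, 1, 0)
)

changes <- usrec %>%
  mutate(recession_change = price - lag(price)) %>%
  # Keeps only the flips; the first row's NA from lag() is dropped too
  filter(recession_change != 0)

recessions <- tibble(
  start = filter(changes, recession_change == 1)$date,
  end   = filter(changes, recession_change == -1)$date
)
recessions
```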

Your turn!

Accessing APIs yourself

What if there’s an API but no pre-built package?

- You can still use the API!

- You’ll need to write your own code to access it, using the {httr} R package

Sending data to websites

GET

Data is sent to the server as parameters in the URL

Parameters are all visible in the URL

POST

Data is sent to the server in the body of the request, not in the URL

Used for things like forms with usernames and passwords

Why not just build the URL on your own?
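One reason: hand-built URLs break as soon as a value contains a space or another special character. Below is a small sketch with {httr}'s `modify_url()`. The `example.com` address and its parameters are placeholders.

```r
library(httr)

# Pasting by hand leaves the space unescaped, which is not a valid URL
by_hand <- paste0("https://example.com/search?q=", "unemployment rate", "&page=2")

# modify_url() assembles the pieces and percent-encodes the query values
built <- modify_url("https://example.com/",
                    path = "search",
                    query = list(q = "unemployment rate", page = "2"))
built
```

In `built`, the space is percent-encoded as `%20`. In `by_hand`, it stays a literal space.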

Getting data from websites

Content types

Data can be returned as text, JSON, XML, files, etc.

JSON

XML

<wb:countries page="1" pages="1" per_page="50" total="28">

<wb:country id="AFG">

<wb:iso2Code>AF</wb:iso2Code>

<wb:name>Afghanistan</wb:name>

<wb:region id="SAS" iso2code="8S">South Asia</wb:region>

<wb:adminregion id="SAS" iso2code="8S">South Asia</wb:adminregion>

<wb:incomeLevel id="LIC" iso2code="XM">Low income</wb:incomeLevel>

<wb:lendingType id="IDX" iso2code="XI">IDA</wb:lendingType>

<wb:capitalCity>Kabul</wb:capitalCity>

<wb:longitude>69.1761</wb:longitude>

<wb:latitude>34.5228</wb:latitude>

</wb:country>

<wb:country id="BDI">

<wb:iso2Code>BI</wb:iso2Code>

<wb:name>Burundi</wb:name>

...
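You can parse XML responses like this one with {xml2}. This sketch works on a trimmed copy of the snippet above. The `xmlns:wb` declaration and its URI are assumptions, added so the `wb:` prefix is valid XML. A real response declares its own namespace.

```r
library(xml2)

# Trimmed copy of the response above, plus an assumed namespace declaration
xml_txt <- '<wb:countries xmlns:wb="http://www.worldbank.org" page="1" pages="1">
  <wb:country id="AFG">
    <wb:iso2Code>AF</wb:iso2Code>
    <wb:name>Afghanistan</wb:name>
  </wb:country>
  <wb:country id="BDI">
    <wb:iso2Code>BI</wb:iso2Code>
    <wb:name>Burundi</wb:name>
  </wb:country>
</wb:countries>'

doc <- read_xml(xml_txt)
ns <- xml_ns(doc)  # keeps the "wb" prefix so XPath can use it

countries <- xml_find_all(doc, ".//wb:country", ns)

# One row per <wb:country> node
countries_df <- data.frame(
  id   = xml_attr(countries, "id"),
  iso2 = xml_text(xml_find_first(countries, "./wb:iso2Code", ns)),
  name = xml_text(xml_find_first(countries, "./wb:name", ns))
)
countries_df
```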

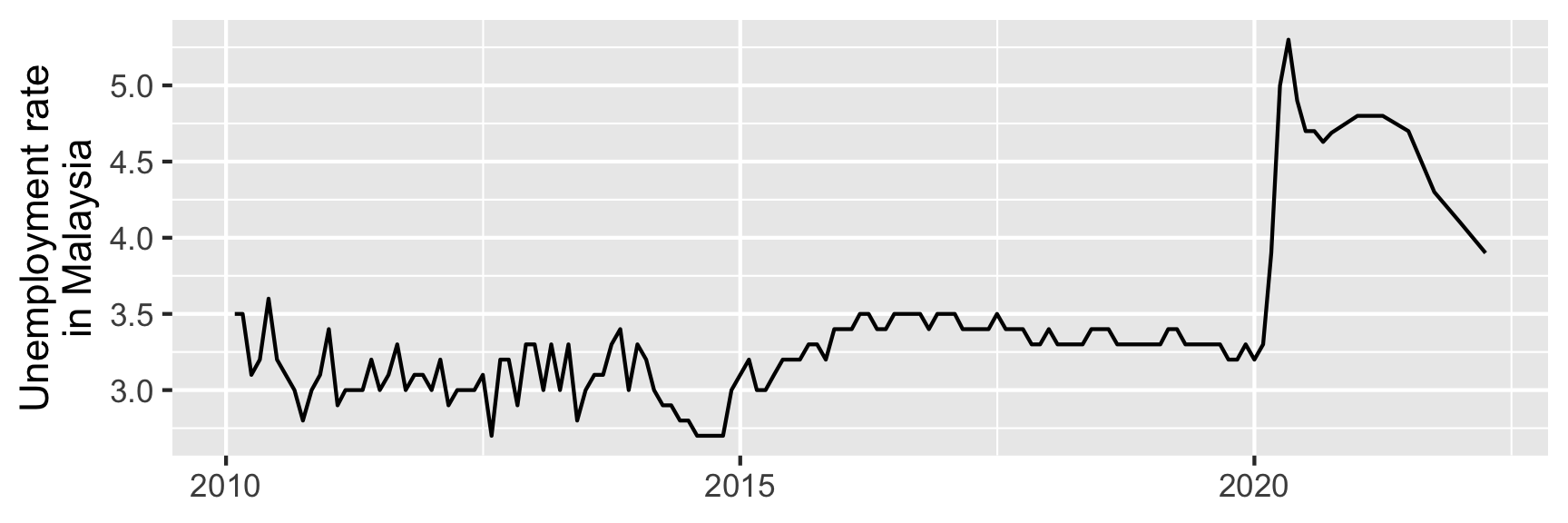

library(httr)

# Build the URL query
api_url <- modify_url("https://www.econdb.com/",
                      path = "api/series/URATEMY",
                      query = list(format = "json"))

# Submit the query
r <- GET(api_url)

r

## Response [https://www.econdb.com/api/series/URATEMY/?format=json]

## Date: 2022-09-11 15:56

## Status: 200

## Content-Type: application/json

## Size: 3.7 kB

headers(r)

## $date

## [1] "Sun, 11 Sep 2022 15:56:19 GMT"

##

## $`content-type`

## [1] "application/json"

##

## $vary

## [1] "Accept-Encoding"

##

## $vary

## [1] "Accept, Origin"

##

## $allow

## [1] "GET, HEAD, OPTIONS"

##

## $`x-frame-options`

## [1] "DENY"

##

## $`x-content-type-options`

## [1] "nosniff"

##

## $`referrer-policy`

## [1] "same-origin"

##

## $`content-encoding`

## [1] "gzip"

##

## $`cf-cache-status`

## [1] "DYNAMIC"

##

## $`report-to`

## [1] "{\"endpoints\":[{\"url\":\"https:\\/\\/a.nel.cloudflare.com\\/report\\/v3?s=M6BI1UVSV934RbiLLRmu0N9p96i5%2FKUMWVx4WyNQbh94zk1KHGW62Z0MSwBOslB1hu2EbPitIYJaZj%2B7vnUc2A5NJsyp20j0cSWVsWkXT7HIUv25DhGEFfNQncsLxQ2%2B\"}],\"group\":\"cf-nel\",\"max_age\":604800}"

##

## $nel

## [1] "{\"success_fraction\":0,\"report_to\":\"cf-nel\",\"max_age\":604800}"

##

## $server

## [1] "cloudflare"

##

## $`cf-ray`

## [1] "749197b98efa8da6-MIA"

##

## attr(,"class")

## [1] "insensitive" "list"

content(r)

## $ticker

## [1] "URATEMY"

##

## $description

## [1] "Malaysia - Unemployment"

##

## $geography

## [1] "Malaysia"

##

## $frequency

## [1] "M"

##

## $dataset

## [1] "BNM_UNEMP"

##

## $units

## [1] "% of labour force"

##

## $additional_metadata

## $additional_metadata$`2:Indicator`

## [1] "120:Unemployment "

##

## $additional_metadata$`GEO:None`

## [1] "130:None"

##

##

## $data

## $data$values
## $data$values[[1]]
## [1] 3.2
##
## $data$values[[2]]
## [1] 3.5
##
## … [[3]] to [[136]] omitted
##
## $data$values[[137]]
## [1] 3.9
##
##

## $data$dates
## $data$dates[[1]]
## [1] "2001-10-01"
##
## $data$dates[[2]]
## [1] "2010-01-01"
##
## … [[3]] to [[136]] omitted
##
## $data$dates[[137]]
## [1] "2022-04-01"
##
##

## $data$status
## $data$status[[1]]
## [1] "Final"
##
## … [[2]] to [[136]] omitted (all "Final")
##
## $data$status[[137]]
## [1] "Final"

content(r, "text")

## [1] "{\"ticker\":\"URATEMY\",\"description\":\"Malaysia - Unemployment\",\"geography\":\"Malaysia\",\"frequency\":\"M\",\"dataset\":\"BNM_UNEMP\",\"units\":\"% of labour force\",\"additional_metadata\":{\"2:Indicator\":\"120:Unemployment \",\"GEO:None\":\"130:None\"},\"data\":{\"values\":[3.2,3.5,3.5,3.5,3.1,3.2,3.6,3.2,3.1,3.0,2.8,3.0,3.1,3.4,2.9,3.0,3.0,3.0,3.2,3.0,3.1,3.3,3.0,3.1,3.1,3.0,3.2,2.9,3.0,3.0,3.0,3.1,2.7,3.2,3.2,2.9,3.3,3.3,3.0,3.3,3.0,3.3,2.8,3.0,3.1,3.1,3.3,3.4,3.0,3.3,3.2,3.0,2.9,2.9,2.8,2.8,2.7,2.7,2.7,2.7,3.0,3.1,3.2,3.0,3.0,3.1,3.2,3.2,3.2,3.3,3.3,3.2,3.4,3.4,3.4,3.5,3.5,3.4,3.4,3.5,3.5,3.5,3.5,3.4,3.5,3.5,3.5,3.4,3.4,3.4,3.4,3.5,3.4,3.4,3.4,3.3,3.3,3.4,3.3,3.3,3.3,3.3,3.4,3.4,3.4,3.3,3.3,3.3,3.3,3.3,3.3,3.4,3.4,3.3,3.3,3.3,3.3,3.3,3.2,3.2,3.3,3.2,3.3,3.9,5.0,5.3,4.9,4.7,4.7,4.629,4.689,4.8,4.8,4.7,4.3,4.1,3.9],\"dates\":[\"2001-10-01\",\"2010-01-01\",\"2010-02-01\",\"2010-03-01\",\"2010-04-01\",\"2010-05-01\",\"2010-06-01\",\"2010-07-01\",\"2010-08-01\",\"2010-09-01\",\"2010-10-01\",\"2010-11-01\",\"2010-12-01\",\"2011-01-01\",\"2011-02-01\",\"2011-03-01\",\"2011-04-01\",\"2011-05-01\",\"2011-06-01\",\"2011-07-01\",\"2011-08-01\",\"2011-09-01\",\"2011-10-01\",\"2011-11-01\",\"2011-12-01\",\"2012-01-01\",\"2012-02-01\",\"2012-03-01\",\"2012-04-01\",\"2012-05-01\",\"2012-06-01\",\"2012-07-01\",\"2012-08-01\",\"2012-09-01\",\"2012-10-01\",\"2012-11-01\",\"2012-12-01\",\"2013-01-01\",\"2013-02-01\",\"2013-03-01\",\"2013-04-01\",\"2013-05-01\",\"2013-06-01\",\"2013-07-01\",\"2013-08-01\",\"2013-09-01\",\"2013-10-01\",\"2013-11-01\",\"2013-12-01\",\"2014-01-01\",\"2014-02-01\",\"2014-03-01\",\"2014-04-01\",\"2014-05-01\",\"2014-06-01\",\"2014-07-01\",\"2014-08-01\",\"2014-09-01\",\"2014-10-01\",\"2014-11-01\",\"2014-12-01\",\"2015-01-01\",\"2015-02-01\",\"2015-03-01\",\"2015-04-01\",\"2015-05-01\",\"2015-06-01\",\"2015-07-01\",\"2015-08-01\",\"2015-09-01\",\"2015-10-01\",\"2015-11-01\",\"2015-12-01\",\"2016-01-01\",\"2016-02-01\",\"2016-03-01\",\"2
016-04-01\",\"2016-05-01\",\"2016-06-01\",\"2016-07-01\",\"2016-08-01\",\"2016-09-01\",\"2016-10-01\",\"2016-11-01\",\"2016-12-01\",\"2017-01-01\",\"2017-02-01\",\"2017-03-01\",\"2017-04-01\",\"2017-05-01\",\"2017-06-01\",\"2017-07-01\",\"2017-08-01\",\"2017-09-01\",\"2017-10-01\",\"2017-11-01\",\"2017-12-01\",\"2018-01-01\",\"2018-02-01\",\"2018-03-01\",\"2018-04-01\",\"2018-05-01\",\"2018-06-01\",\"2018-07-01\",\"2018-08-01\",\"2018-09-01\",\"2018-10-01\",\"2018-11-01\",\"2018-12-01\",\"2019-01-01\",\"2019-02-01\",\"2019-03-01\",\"2019-04-01\",\"2019-05-01\",\"2019-06-01\",\"2019-07-01\",\"2019-08-01\",\"2019-09-01\",\"2019-10-01\",\"2019-11-01\",\"2019-12-01\",\"2020-01-01\",\"2020-02-01\",\"2020-03-01\",\"2020-04-01\",\"2020-05-01\",\"2020-06-01\",\"2020-07-01\",\"2020-08-01\",\"2020-09-01\",\"2020-10-01\",\"2021-01-01\",\"2021-04-01\",\"2021-07-01\",\"2021-10-01\",\"2022-01-01\",\"2022-04-01\"],\"status\":[\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Fin
al\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\",\"Final\"]}}"## # A tibble: 137 × 3

## values dates status

## <dbl> <chr> <chr>

## 1 3.2 2001-10-01 Final

## 2 3.5 2010-01-01 Final

## 3 3.5 2010-02-01 Final

## 4 3.5 2010-03-01 Final

## 5 3.1 2010-04-01 Final

## 6 3.2 2010-05-01 Final

## 7 3.6 2010-06-01 Final

## 8 3.2 2010-07-01 Final

## 9 3.1 2010-08-01 Final

## 10 3 2010-09-01 Final

## # … with 127 more rows
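The slides don't show the step that turns the JSON text into the tibble above. One way to do it is sketched below with {jsonlite}, on a shortened copy of the response that keeps only the first three observations. With the live response, you would pass `content(r, "text")` to `fromJSON()` instead. Converting `dates` to real dates is an extra step that the output above does not take.

```r
library(jsonlite)
library(dplyr)

# Shortened copy of the econdb response above (first three observations only)
json_txt <- '{"ticker":"URATEMY","units":"% of labour force",
  "data":{"values":[3.2,3.5,3.5],
          "dates":["2001-10-01","2010-01-01","2010-02-01"],
          "status":["Final","Final","Final"]}}'

parsed <- fromJSON(json_txt)  # JSON arrays become plain R vectors

unemployment <- as_tibble(parsed$data) %>%
  mutate(dates = as.Date(dates))  # the API sends dates as text
unemployment
```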

Your turn!

Every API is different

- Each API accepts different arguments, uses different URLs, and returns different variables and formats

- Read the documentation!

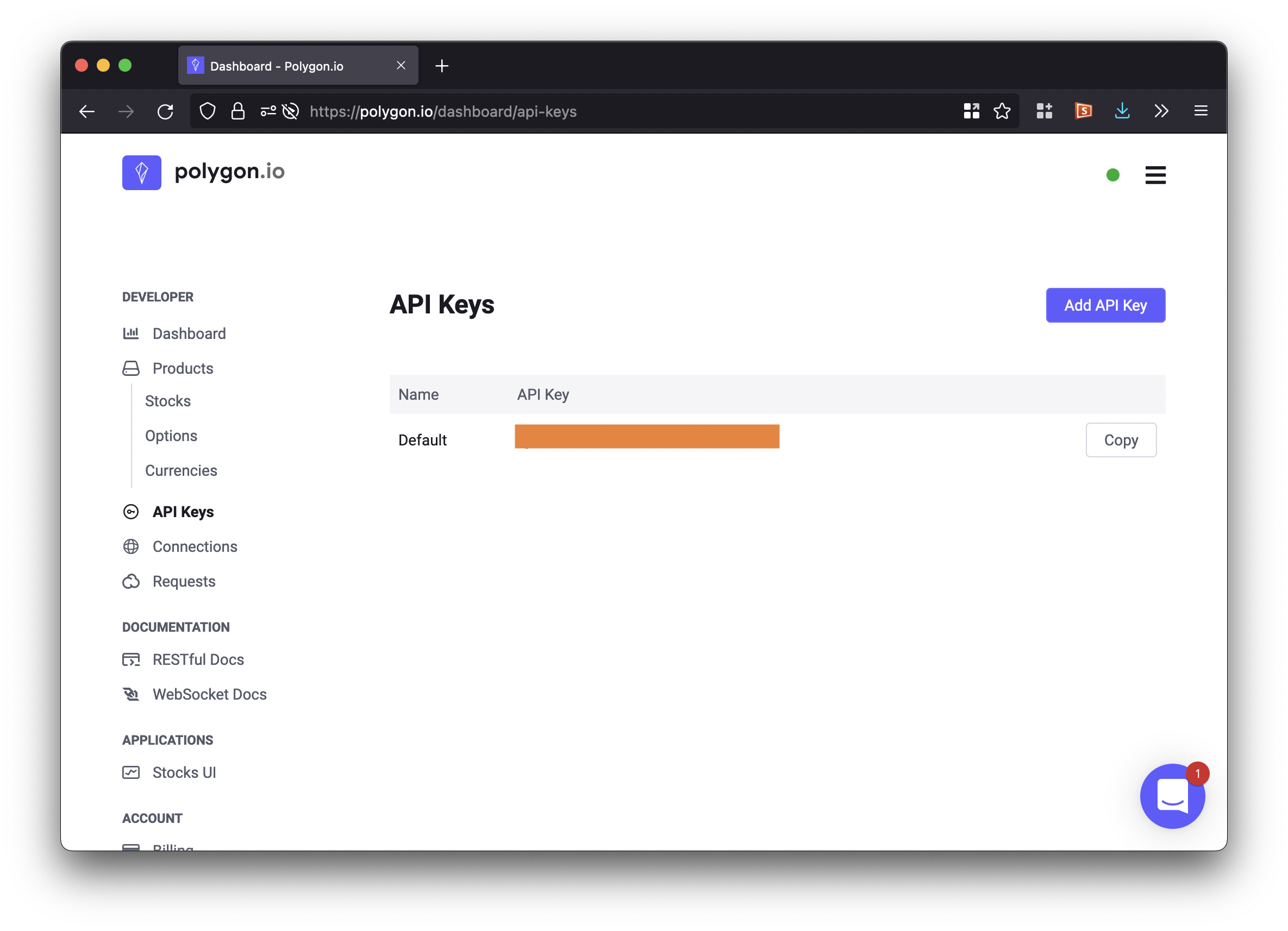

API authentication

Services will often limit your access

- Rate limiting (x API calls/hour)

- Subscription limiting (must have an account)

“Logging in” to an API

API key

A special secret string that you include with every request, usually as a parameter in the query

OAuth authentication

A special file called a “token” that stores your login information for the service
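Here is a sketch of the API-key approach with {httr}. The endpoint, the `apiKey` parameter name, and the `MY_API_KEY` environment variable are all hypothetical. Each API documents its own endpoint and parameter names, and some expect the key in a request header rather than in the URL.

```r
library(httr)

# Read the key from an environment variable (e.g. set in ~/.Renviron) so it
# never appears in the script; MY_API_KEY is a hypothetical name
api_key <- Sys.getenv("MY_API_KEY", unset = "YOUR-KEY-HERE")

# Hypothetical endpoint and parameter name: check the API's documentation
api_url <- modify_url("https://api.example.com/",
                      path = "v1/series/AAPL",
                      query = list(apiKey = api_key))

# Send it once the URL looks right:
# r <- GET(api_url)
# Some APIs want the key in a header instead:
# r <- GET(api_url, add_headers(Authorization = paste("Bearer", api_key)))
```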

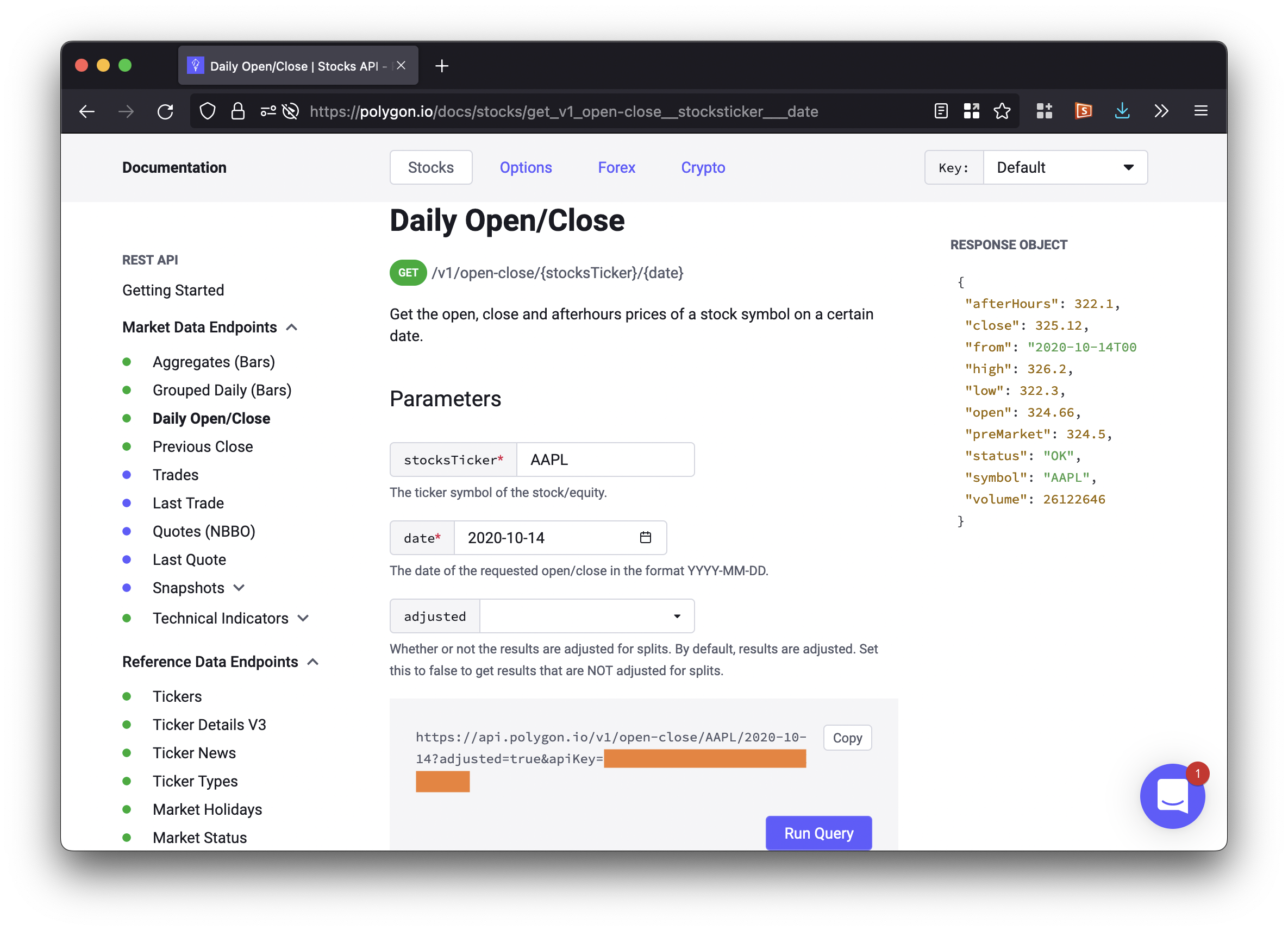

content(r, "text")

```
## [1] "{\"ticker\":\"AAPL\",\"queryCount\":29,\"resultsCount\":29,\"adjusted\":true,\"results\":[{\"v\":6.7778379e+07,\"vw\":162.1045,\"o\":161.01,\"c\":161.51,\"h\":163.59,\"l\":160.89,\"t\":1659326400000,\"n\":594290},{\"v\":5.9907025e+07,\"vw\":160.6921,\"o\":160.1,\"c\":160.01,\"h\":162.41,\"l\":159.63,\"t\":1659412800000,\"n\":543549},{\"v\":8.2507488e+07,\"vw\":164.9105,\"o\":160.84,\"c\":166.13,\"h\":166.59,\"l\":160.75,\"t\":1659499200000,\"n\":682781},{\"v\":5.5474144e+07,\"vw\":165.5946,\"o\":166.005,\"c\":165.81,\"h\":167.19,\"l\":164.43,\"t\":1659585600000,\"n\":525012},{\"v\":5.6696985e+07,\"vw\":164.7432,\"o\":163.21,\"c\":165.35,\"h\":165.85,\"l\":163,\"t\":1659672000000,\"n\":491310},{\"v\":6.0362338e+07,\"vw\":165.8939,\"o\":166.37,\"c\":164.87,\"h\":167.81,\"l\":164.2,\"t\":1659931200000,\"n\":540017},{\"v\":6.3075503e+07,\"vw\":164.8395,\"o\":164.02,\"c\":164.92,\"h\":165.82,\"l\":163.25,\"t\":1660017600000,\"n\":480552},{\"v\":7.017054e+07,\"vw\":168.3496,\"o\":167.68,\"c\":169.24,\"h\":169.34,\"l\":166.9,\"t\":1660104000000,\"n\":559789},{\"v\":5.7142109e+07,\"vw\":169.3737,\"o\":170.06,\"c\":168.49,\"h\":170.99,\"l\":168.19,\"t\":1660190400000,\"n\":507914},{\"v\":6.8039382e+07,\"vw\":171.0754,\"o\":169.82,\"c\":172.1,\"h\":172.17,\"l\":169.4,\"t\":1660276800000,\"n\":557624},{\"v\":5.4091694e+07,\"vw\":172.6254,\"o\":171.52,\"c\":173.19,\"h\":173.39,\"l\":171.345,\"t\":1660536000000,\"n\":501625},{\"v\":5.637705e+07,\"vw\":172.7427,\"o\":172.78,\"c\":173.03,\"h\":173.71,\"l\":171.6618,\"t\":1660622400000,\"n\":515134},{\"v\":7.9542037e+07,\"vw\":174.3135,\"o\":172.77,\"c\":174.55,\"h\":176.15,\"l\":172.57,\"t\":1660708800000,\"n\":686577},{\"v\":6.2290075e+07,\"vw\":174.1366,\"o\":173.75,\"c\":174.15,\"h\":174.9,\"l\":173.12,\"t\":1660795200000,\"n\":545655},{\"v\":7.0336995e+07,\"vw\":172.2302,\"o\":173.03,\"c\":171.52,\"h\":173.74,\"l\":171.3101,\"t\":1660881600000,\"n\":573126},{\"v\":6.8975809e+07,\"vw\":168.2677,\"o\":169.69,\"c\":167.57,\"h\":169.86,\"l\":167.135,\"t\":1661140800000,\"n\":620800},{\"v\":5.4147079e+07,\"vw\":167.6231,\"o\":167.08,\"c\":167.23,\"h\":168.71,\"l\":166.65,\"t\":1661227200000,\"n\":494686},{\"v\":5.3841524e+07,\"vw\":167.3144,\"o\":167.32,\"c\":167.53,\"h\":168.11,\"l\":166.245,\"t\":1661313600000,\"n\":476970},{\"v\":5.1218209e+07,\"vw\":169.3503,\"o\":168.78,\"c\":170.03,\"h\":170.14,\"l\":168.35,\"t\":1661400000000,\"n\":465398},{\"v\":7.896098e+07,\"vw\":165.9997,\"o\":170.57,\"c\":163.62,\"h\":171.05,\"l\":163.56,\"t\":1661486400000,\"n\":722008},{\"v\":7.3313953e+07,\"vw\":161.5291,\"o\":161.145,\"c\":161.38,\"h\":162.9,\"l\":159.82,\"t\":1661745600000,\"n\":640593},{\"v\":7.7906197e+07,\"vw\":159.2926,\"o\":162.13,\"c\":158.91,\"h\":162.56,\"l\":157.72,\"t\":1661832000000,\"n\":644671},{\"v\":8.7991091e+07,\"vw\":158.3972,\"o\":160.305,\"c\":157.22,\"h\":160.58,\"l\":157.14,\"t\":1661918400000,\"n\":606827},{\"v\":7.4229896e+07,\"vw\":156.5232,\"o\":156.64,\"c\":157.96,\"h\":158.42,\"l\":154.67,\"t\":1662004800000,\"n\":654667},{\"v\":7.6807768e+07,\"vw\":157.6597,\"o\":159.75,\"c\":155.81,\"h\":160.362,\"l\":154.965,\"t\":1662091200000,\"n\":646414},{\"v\":7.3714843e+07,\"vw\":155.012,\"o\":156.47,\"c\":154.53,\"h\":157.09,\"l\":153.69,\"t\":1662436800000,\"n\":687436},{\"v\":8.7293824e+07,\"vw\":155.306,\"o\":154.825,\"c\":155.96,\"h\":156.67,\"l\":153.61,\"t\":1662523200000,\"n\":696954},{\"v\":8.4909447e+07,\"vw\":154.3949,\"o\":154.64,\"c\":154.46,\"h\":156.36,\"l\":152.68,\"t\":1662609600000,\"n\":697264},{\"v\":6.8081006e+07,\"vw\":156.6625,\"o\":155.47,\"c\":157.37,\"h\":157.82,\"l\":154.75,\"t\":1662696000000,\"n\":532851}],\"status\":\"OK\",\"request_id\":\"bffe0b9cec51d2103b1f6cac6cd63341\",\"count\":29}"
```

{

"ticker": "AAPL",

"queryCount": 29,

"resultsCount": 29,

"adjusted": true,

"results": [

{

"v": 6.7778379e+07,

"vw": 162.1045,

"o": 161.01,

"c": 161.51,

"h": 163.59,

"l": 160.89,

"t": 1659326400000,

"n": 594290

},

{

"v": 5.9907025e+07,

"vw": 160.6921,

"o": 160.1,

"c": 160.01,

"h": 162.41,

"l": 159.63,

"t": 1659412800000,

"n": 543549

},

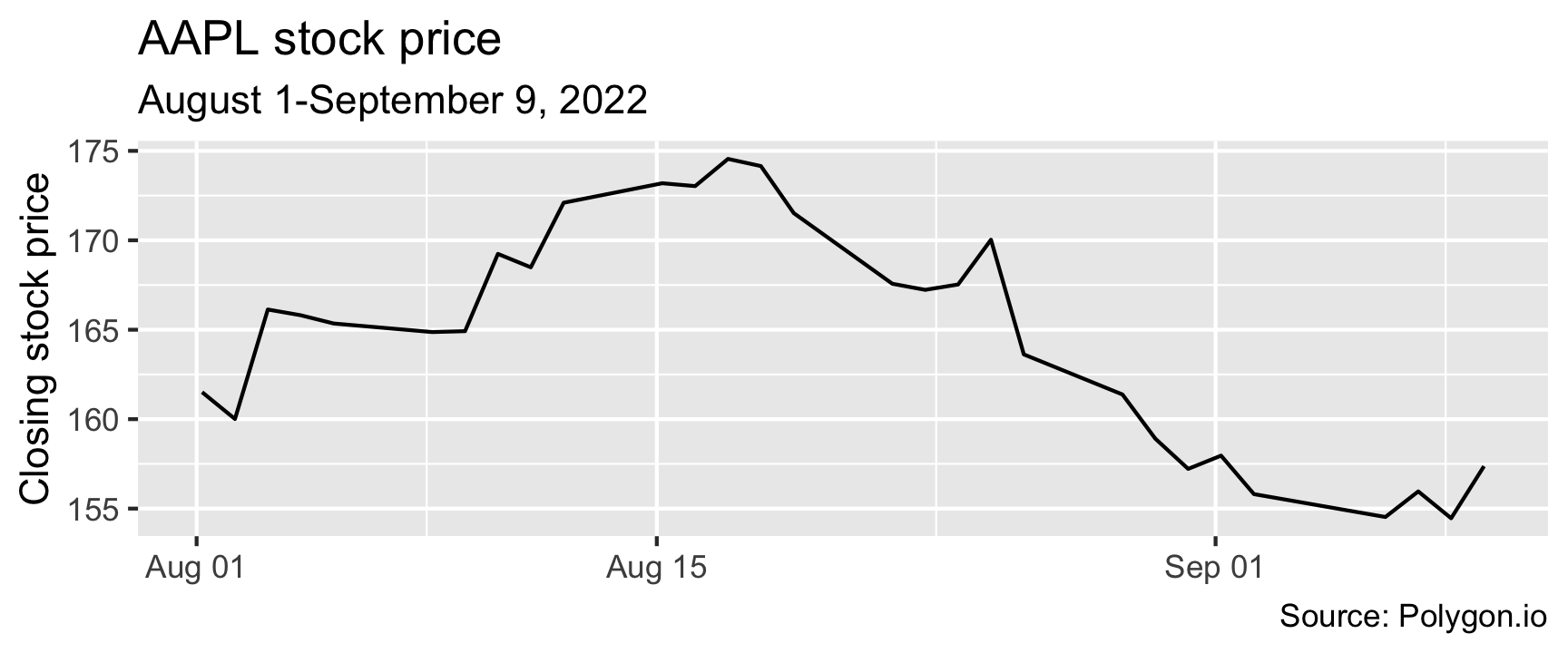

...library(ggplot2)

library(lubridate)

aapl <- data.frame(fromJSON(content(r, "text"))$results) %>%

mutate(ts = as_datetime(t / 1000))

ggplot(aapl, aes(x = ts, y = c)) +

geom_line() +

labs(x = NULL, y = "Closing stock price", title = "AAPL stock price",

subtitle = "August 1-September 9, 2022", caption = "Source: Polygon.io")

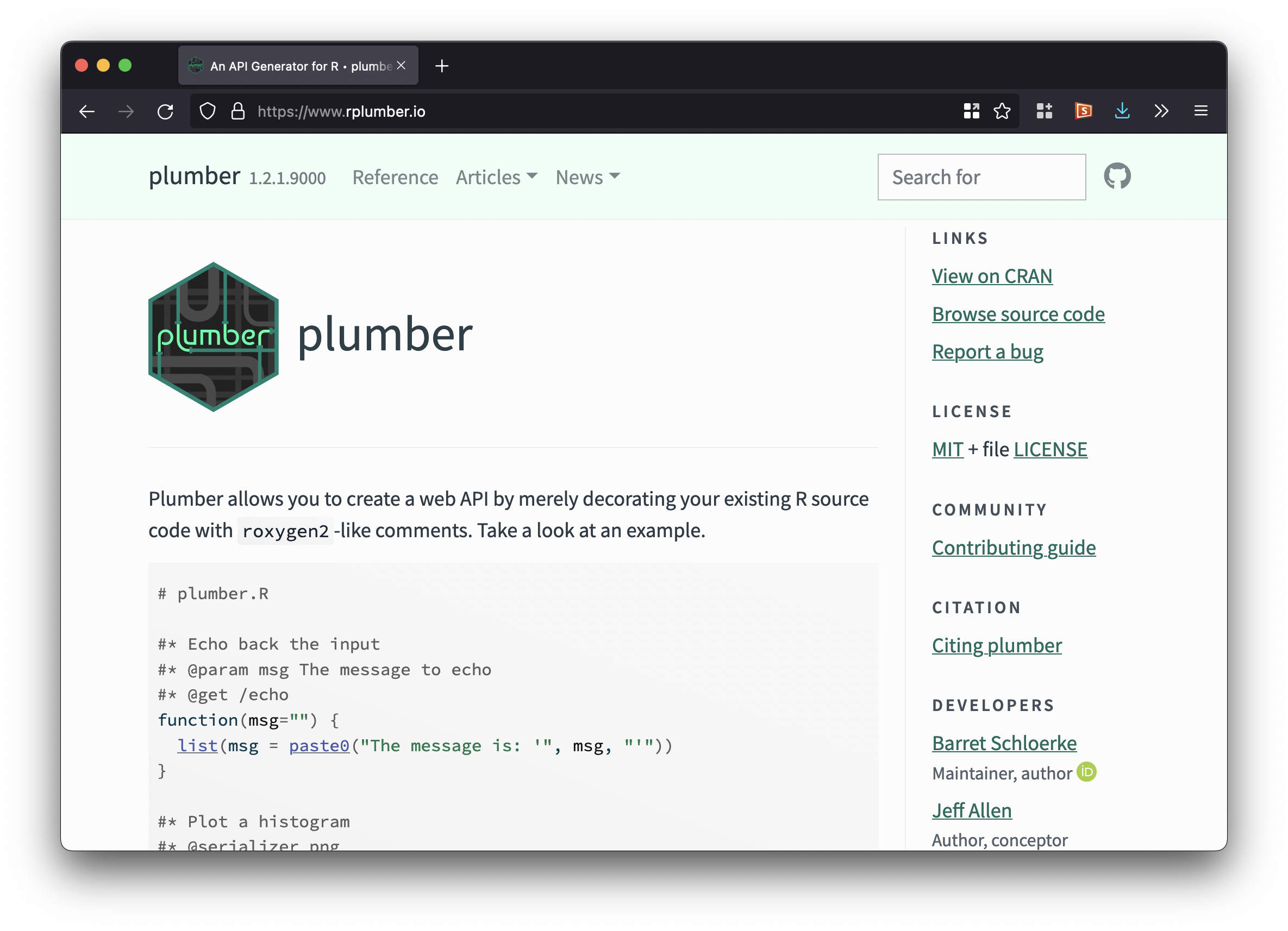
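To see the two parsing steps in isolation, here is a small offline sketch that feeds {jsonlite} a trimmed copy of the response above (just the first two daily bars) instead of calling the API. It shows that `fromJSON()` simplifies the `results` array into a data frame, and that dividing `t` by 1,000 turns milliseconds into seconds. Converting to New York dates at the end is an added illustration, not part of the original code.

```r
library(jsonlite)

# A trimmed copy of the Polygon.io response above: only the first two daily bars
json_text <- '{
  "ticker": "AAPL",
  "results": [
    {"v": 6.7778379e+07, "vw": 162.1045, "o": 161.01, "c": 161.51,
     "h": 163.59, "l": 160.89, "t": 1659326400000, "n": 594290},
    {"v": 5.9907025e+07, "vw": 160.6921, "o": 160.1, "c": 160.01,
     "h": 162.41, "l": 159.63, "t": 1659412800000, "n": 543549}
  ]
}'

parsed <- fromJSON(json_text)

# fromJSON() simplifies an array of objects with the same keys into a data frame
bars <- parsed$results
class(bars)
## [1] "data.frame"

# "t" is milliseconds since 1970-01-01 UTC, so divide by 1000 to get seconds
bars$ts <- as.POSIXct(bars$t / 1000, origin = "1970-01-01", tz = "UTC")
format(bars$ts, "%Y-%m-%d %H:%M")
## [1] "2022-08-01 04:00" "2022-08-02 04:00"

# 04:00 UTC is midnight in New York in August (EDT, UTC-4)
as.Date(bars$ts, tz = "America/New_York")
## [1] "2022-08-01" "2022-08-02"
```

That 04:00 offset is why the plotted timestamps sit at 4 AM UTC rather than midnight.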

Make your own API

Scraping websites

What if there’s no API? :(

- Copy and paste

- Scrape the website (fancy copying and pasting)

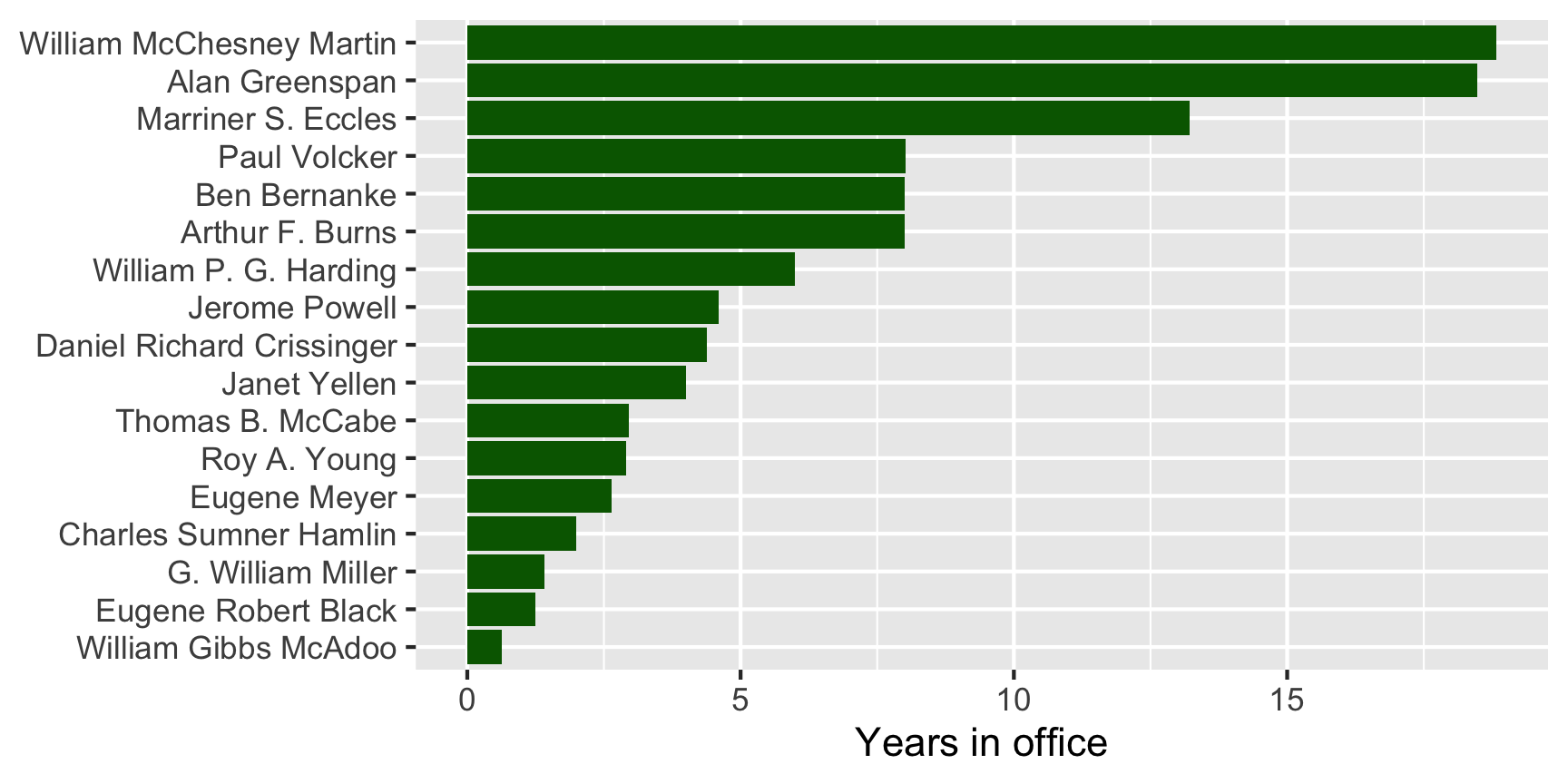

library(rvest)

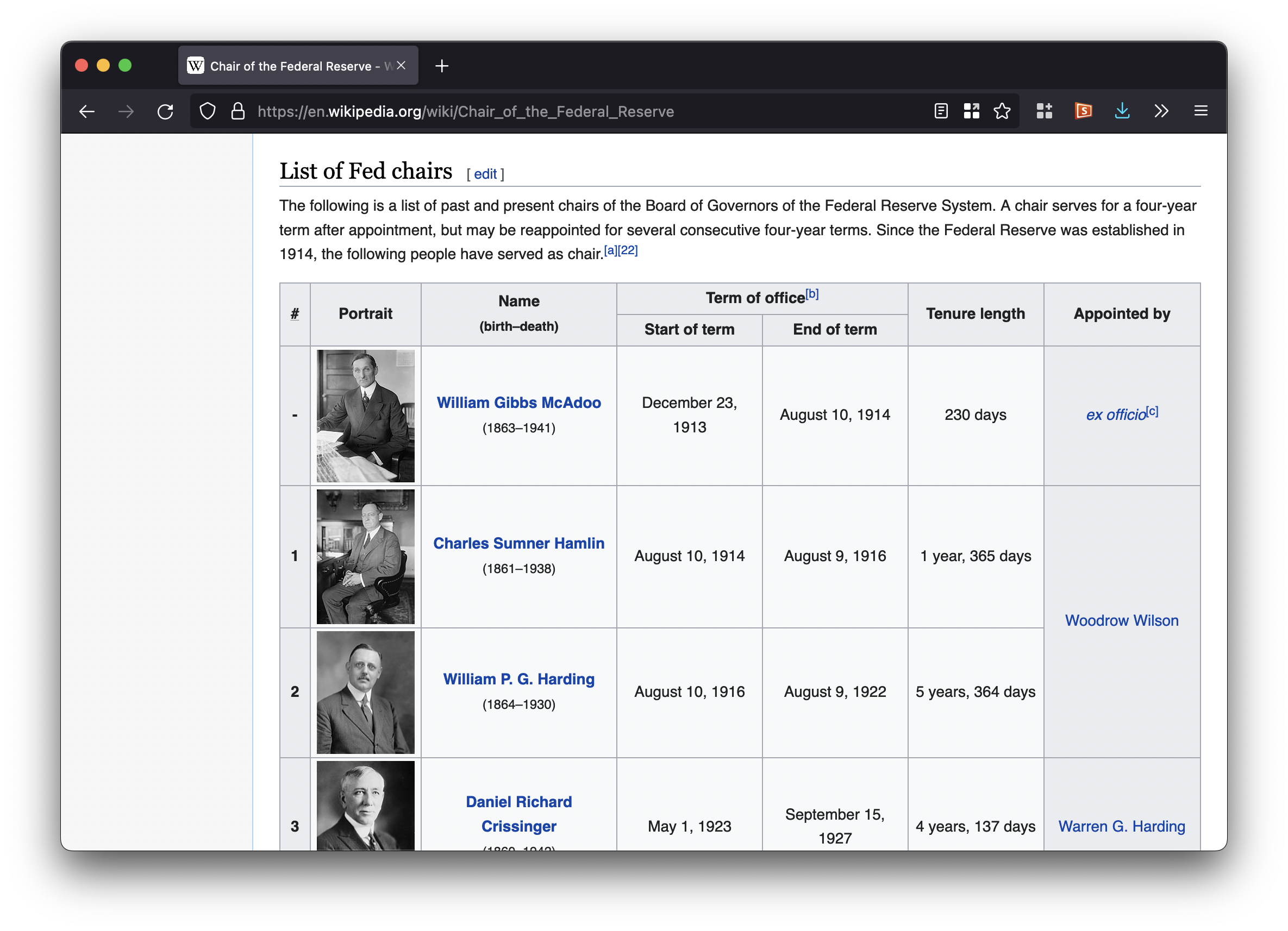

wiki_url <- "https://en.wikipedia.org/wiki/Chair_of_the_Federal_Reserve"

# Even better to use the Internet Archive since web pages change over time

wiki_url <- "https://web.archive.org/web/20220908211042/https://en.wikipedia.org/wiki/Chair_of_the_Federal_Reserve"

wiki_raw <- read_html(wiki_url)

wiki_raw## {html_document}

## <html class="client-nojs" lang="en" dir="ltr">

## [1] <head>\n<meta http-equiv="Content-Type" content="text/html; charset= ...

## [2] <body class="mediawiki ltr sitedir-ltr mw-hide-empty-elt ns-0 ns-sub ...
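Once `read_html()` has parsed a page, you pick out pieces of it with selectors, either XPath expressions or CSS selectors. As a hedged, offline sketch (the tiny page below is made up and is not the Wikipedia article), this targets a table by its CSS class. `read_html()` also accepts a literal HTML string, and `html_elements()` is the {rvest} 1.0 name for what older code calls `html_nodes()`.

```r
library(rvest)

# A made-up page with two tables; only the second one has class "wikitable"
page <- read_html('
<html><body>
  <table><tr><th>Ignore</th></tr><tr><td>me</td></tr></table>
  <table class="wikitable">
    <tr><th>Name</th><th>Tenure length</th></tr>
    <tr><td>Janet Yellen</td><td>4 years, 0 days</td></tr>
    <tr><td>Paul Volcker</td><td>8 years, 5 days</td></tr>
  </table>
</body></html>')

# CSS selector: every <table> element with class "wikitable"
chairs <- page %>%
  html_elements("table.wikitable") %>%
  html_table()   # returns a list with one tibble per matched table

chairs[[1]]
```

A class-based selector like this tends to survive small layout changes better than a full XPath copied from the browser, though it breaks too if the site renames the class.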

[[1]]

# A tibble: 18 × 7

`#` Portrait `Name(birth–death)` Term …¹ Term …² Tenur…³ Appoi…⁴

<chr> <chr> <chr> <chr> <chr> <chr> <chr>

1 # "Portrait" Name(birth–death) Start … End of… Tenure… Appoin…

2 - "" William Gibbs McAdoo(1… Decemb… August… 230 da… ex off…

3 1 "" Charles Sumner Hamlin(… August… August… 1 year… Woodro…

4 2 "" William P. G. Harding(… August… August… 5 year… Woodro…

5 3 "" Daniel Richard Crissin… May 1,… Septem… 4 year… Warren…

6 4 "" Roy A. Young(1882–1960) Octobe… August… 2 year… Calvin…

7 5 "" Eugene Meyer(1875–1959) Septem… May 10… 2 year… Herber…

8 6 "" Eugene Robert Black(18… May 19… August… 1 year… Frankl…

9 7 "" Marriner S. Eccles[d](… Novemb… Januar… 13 yea… Frankl…

10 8 "" Thomas B. McCabe(1893–… April … March … 2 year… Harry …

11 9 "" William McChesney Mart… April … Januar… 18 yea… Harry …

12 10 "" Arthur F. Burns[e](190… Februa… Januar… 7 year… Richar…

13 11 "" G. William Miller(1925… March … August… 1 year… Jimmy …

14 12 "" Paul Volcker(1927–2019) August… August… 8 year… Jimmy …

15 13 "" Alan Greenspan[f](born… August… Januar… 18 yea… Ronald…

16 14 "" Ben Bernanke(born 1953) Februa… Januar… 7 year… George…

17 15 "" Janet Yellen(born 1946) Februa… Februa… 4 year… Barack…

18 16 "" Jerome Powell[g](born … Februa… Incumb… 4 year… Donald…

# … with abbreviated variable names ¹`Term of office[b]`,

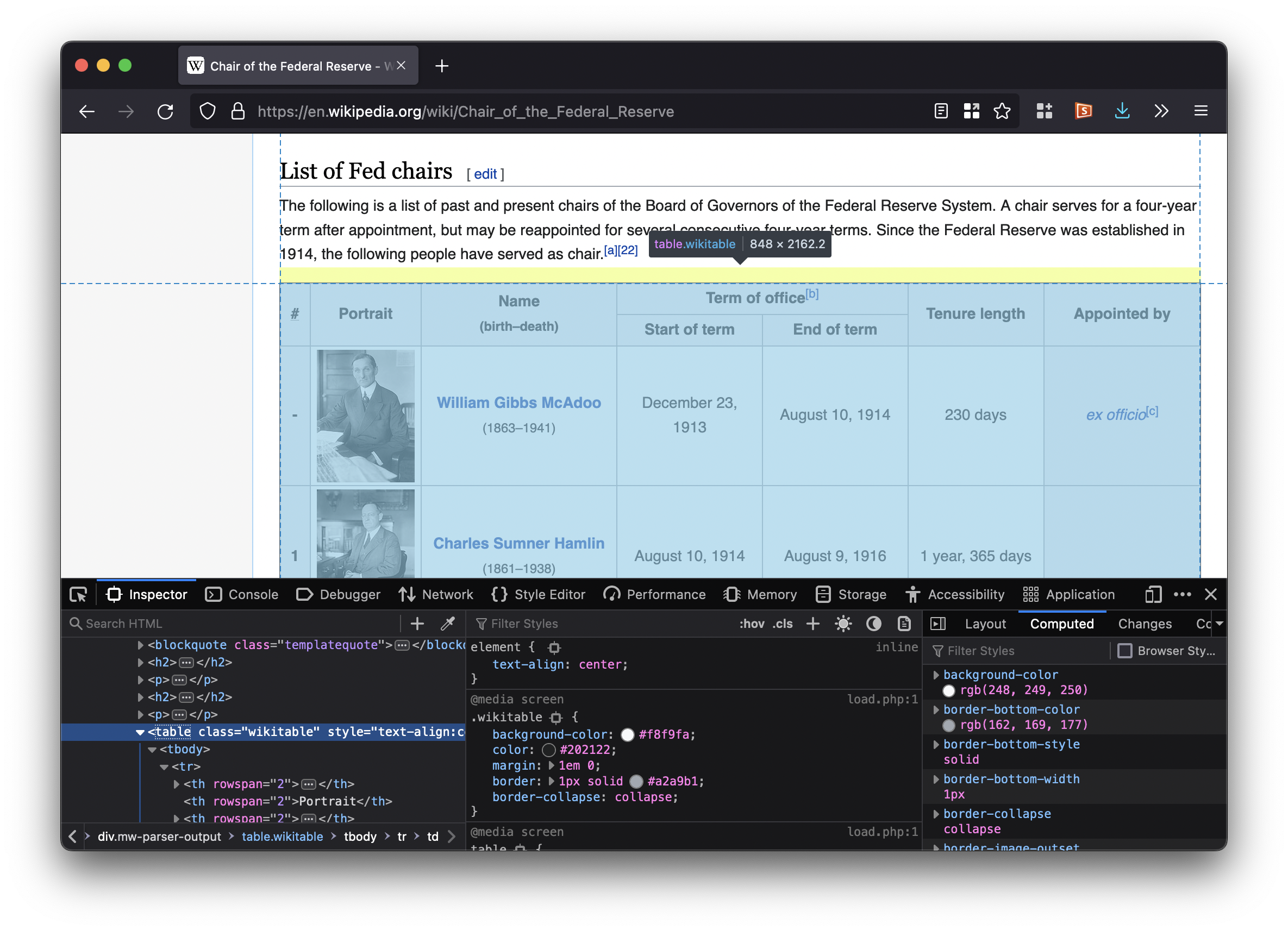

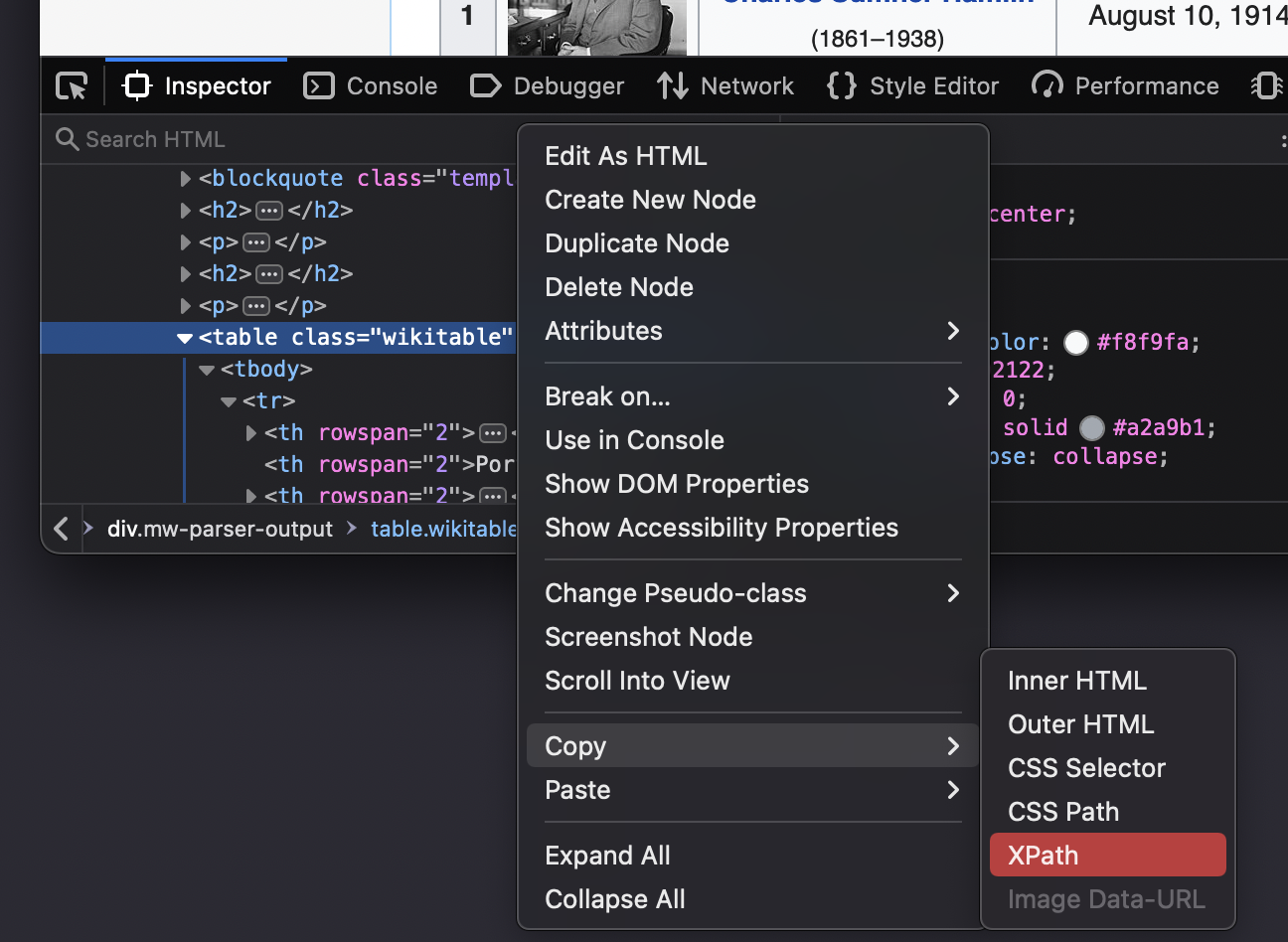

# ²`Term of office[b]`, ³`Tenure length`, ⁴`Appointed by`wiki_raw %>%

html_nodes(xpath = "/html/body/div[3]/div[3]/div[5]/div[1]/table[2]") %>%

html_table() %>%

bind_rows()# A tibble: 18 × 7

`#` Portrait `Name(birth–death)` Term …¹ Term …² Tenur…³ Appoi…⁴

<chr> <chr> <chr> <chr> <chr> <chr> <chr>

1 # "Portrait" Name(birth–death) Start … End of… Tenure… Appoin…

2 - "" William Gibbs McAdoo(1… Decemb… August… 230 da… ex off…

3 1 "" Charles Sumner Hamlin(… August… August… 1 year… Woodro…

4 2 "" William P. G. Harding(… August… August… 5 year… Woodro…

5 3 "" Daniel Richard Crissin… May 1,… Septem… 4 year… Warren…

6 4 "" Roy A. Young(1882–1960) Octobe… August… 2 year… Calvin…

7 5 "" Eugene Meyer(1875–1959) Septem… May 10… 2 year… Herber…

8 6 "" Eugene Robert Black(18… May 19… August… 1 year… Frankl…

9 7 "" Marriner S. Eccles[d](… Novemb… Januar… 13 yea… Frankl…

10 8 "" Thomas B. McCabe(1893–… April … March … 2 year… Harry …

11 9 "" William McChesney Mart… April … Januar… 18 yea… Harry …

12 10 "" Arthur F. Burns[e](190… Februa… Januar… 7 year… Richar…

13 11 "" G. William Miller(1925… March … August… 1 year… Jimmy …

14 12 "" Paul Volcker(1927–2019) August… August… 8 year… Jimmy …

15 13 "" Alan Greenspan[f](born… August… Januar… 18 yea… Ronald…

16 14 "" Ben Bernanke(born 1953) Februa… Januar… 7 year… George…

17 15 "" Janet Yellen(born 1946) Februa… Februa… 4 year… Barack…

18 16 "" Jerome Powell[g](born … Februa… Incumb… 4 year… Donald…

# … with abbreviated variable names ¹`Term of office[b]...4`,

# ²`Term of office[b]...5`, ³`Tenure length`, ⁴`Appointed by`wiki_clean <- wiki_raw %>%

html_nodes(xpath = "/html/body/div[3]/div[3]/div[5]/div[1]/table[2]") %>%

html_table() %>%

bind_rows() %>%

# Remove first row

slice(-1) %>%

# Extract name

separate(`Name(birth–death)`, into = c("Name", "birth-death"), sep = "\\(") %>%

mutate(Name = str_remove(Name, "\\[.\\]")) %>%

# Calculate duration in office

mutate(tenure_length = as.period(`Tenure length`)) %>%

mutate(seconds = as.numeric(tenure_length)) %>%

mutate(years = seconds / 60 / 60 / 24 / 365.25) %>%

# Put name in order of duration

arrange(tenure_length) %>%

mutate(Name = fct_inorder(Name))# A tibble: 17 × 3

Name `Tenure length` years

<fct> <chr> <dbl>

1 William Gibbs McAdoo 230 days 0.630

2 Eugene Robert Black 1 year, 88 days 1.24

3 G. William Miller 1 year, 151 days 1.41

4 Charles Sumner Hamlin 1 year, 365 days 2.00

5 Eugene Meyer 2 years, 236 days 2.65

6 Roy A. Young 2 years, 331 days 2.91

7 Thomas B. McCabe 2 years, 350 days 2.96

8 Janet Yellen 4 years, 0 days 4

9 Daniel Richard Crissinger 4 years, 137 days 4.38

10 Jerome Powell 4 years, 218 days 4.60

11 William P. G. Harding 5 years, 364 days 6.00

12 Arthur F. Burns 7 years, 364 days 8.00

13 Ben Bernanke 7 years, 364 days 8.00

14 Paul Volcker 8 years, 5 days 8.01

15 Marriner S. Eccles 13 years, 77 days 13.2

16 Alan Greenspan 18 years, 173 days 18.5

17 William McChesney Martin 18 years, 304 days 18.8
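The `years` column comes from parsing the `Tenure length` text with {lubridate} and dividing by a 365.25-day year. Here is a minimal check of that arithmetic on three values copied from the table above. `period_to_seconds()` is used to make the seconds conversion explicit; it is a swap for the `as.numeric()` call in the pipeline, not a claim about how that call is implemented.

```r
library(lubridate)

# Three "Tenure length" values copied from the table above
tenure <- c("230 days", "1 year, 88 days", "4 years, 0 days")

# Parse the text into Period objects (e.g., 1 year + 88 days)
p <- as.period(tenure)

# Convert to seconds; period_to_seconds() counts a year as 365.25 days
secs <- period_to_seconds(p)

# Back to fractional years, using the same 365.25-day year as the slide
years <- secs / 60 / 60 / 24 / 365.25
round(years, 2)
## [1] 0.63 1.24 4.00
```

Because both steps use a 365.25-day year, "4 years, 0 days" comes out as exactly 4, while "1 year, 365 days" lands just under 2 and rounds to the 2.00 shown in the table.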

More complex scraping

- What if there are multiple tables, or entries, or sections, or web pages?

- Loops!
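A minimal sketch of the loop idea, assuming each page is already available as HTML text. The `pages` vector and the `scrape_tables()` helper are hypothetical stand-ins; with real sites you would call `read_html(url)` on each URL and pause between requests.

```r
library(rvest)
library(dplyr)

# Hypothetical stand-ins for pages you would normally fetch with read_html(url)
pages <- c(
  page_a = "<table><tr><th>x</th><th>y</th></tr><tr><td>1</td><td>a</td></tr></table>",
  page_b = "<table><tr><th>x</th><th>y</th></tr><tr><td>2</td><td>b</td></tr></table>
            <table><tr><th>x</th><th>y</th></tr><tr><td>3</td><td>c</td></tr></table>"
)

# Hypothetical helper: grab every <table> on one page and stack them
scrape_tables <- function(html) {
  read_html(html) %>%
    html_elements("table") %>%
    html_table() %>%
    bind_rows()
}

results <- vector("list", length(pages))
names(results) <- names(pages)
for (i in seq_along(pages)) {
  results[[i]] <- scrape_tables(pages[[i]])
  # With real URLs, pause between requests to be polite to the server:
  # Sys.sleep(1)
}

# One combined data frame, with a column recording which page each row came from
combined <- bind_rows(results, .id = "page")
combined
```

Handling multiple tables inside one page and multiple pages in the loop keeps each piece small enough to test on a single page first.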

Your turn!

Summary

Real world data is a mess

- Every dataset is unique

- Every API is unique

- Every website is unique

General principles

- Try to use APIs to access data directly from data sources

- Ideally use a pre-built R package

- If not, use {httr}

- Consider making an API package (best practices)

- If there’s no API, scrape (politely) with {rvest}
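Scraping "politely" usually means identifying yourself and not hammering the server with rapid-fire requests. A minimal sketch of that pattern, assuming {httr} and {rvest} are installed; `fetch_page()`, `polite_map()`, the user-agent string, and the 2-second default delay are all hypothetical choices for illustration, not settings any particular site requires.

```r
# Hypothetical fetcher: identify yourself with a user agent and fail loudly on HTTP errors
fetch_page <- function(url) {
  resp <- httr::GET(url, httr::user_agent("my-research-scraper (me@example.com)"))
  httr::stop_for_status(resp)
  rvest::read_html(httr::content(resp, as = "text", encoding = "UTF-8"))
}

# Hypothetical rate-limited loop: wait `delay` seconds between requests
polite_map <- function(urls, fetch = fetch_page, delay = 2) {
  out <- vector("list", length(urls))
  names(out) <- urls
  for (i in seq_along(urls)) {
    if (i > 1) Sys.sleep(delay)  # pause before every request except the first
    out[[i]] <- fetch(urls[[i]])
  }
  out
}
```

Passing the fetcher in as an argument makes the loop easy to try offline, e.g. `polite_map(c("a", "b"), fetch = toupper, delay = 0)`, before pointing it at real URLs.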